Intro

- Docker is an open-source containerization tool that lets you run applications in isolated environments called containers.

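- A container packages:

- Code

- Configs

- Runtime (the language runtime the application needs, for example a JRE; container runtimes such as containerd and CRI-O run containers and are not packaged inside them)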

- Environment

- Libraries

- Docker is installed on top of the host operating system. Application images are built from a Dockerfile, and applications are deployed as containers created from those images. Each image packages all the software and tools the application requires.

- Challenges with Traditional Software Deployment Approaches.

- Before Docker, the software lifecycle involved several manual and error-prone processes:

- 1. Development:

- Developers manually installed required software and dependencies on their machines.

- Teams wrote lengthy setup instructions to replicate environments for development, testing, and production.

- 2. Testing:

- Test environments were manually configured to resemble production systems, but differences in configuration often caused problems.

- Even minor inconsistencies led to bugs that only appeared in production, making testing unreliable.

- 3. Deployment:

- Applications were packaged into .tar.gz files or .zip archives.

- Scripts or manual processes were used to copy files, install dependencies, and configure services—leading to human errors and delays.

- Challenges with the traditional approach:

- Different operating systems, libraries, and configurations across environments lead to inconsistencies.

- Example: Development uses Python 3.4, but production runs Python 3.1, causing compatibility issues.

- Running multiple Virtual Machines (VMs) for different applications increases overhead.

- Hosting multiple applications on a single VM leads to resource contention.

- Manual configurations across development, testing, and production environments introduce human errors.

- Each environment may require different settings, leading to deployment failures.

- Applications running on the same VM can conflict due to shared dependencies, ports, or configurations.

- Example: Two applications requiring different versions of the same library can lead to unexpected failures.

- Solution? → Docker Containers

- How Docker works: the main components of the workflow:

- Docker Images: The blueprints for containers, including code and dependencies.

- Docker Containers: The runnable instances created from images.

- Docker Registries: Storage locations for Docker images, such as Docker Hub.

- Docker Engine: The core component that powers Docker.

- Example: secretsanta

- secretsanta is a Java-based application. To run it, the following environment is required:

- Operating System:

- Linux

- Dependencies:

- jdk

- convert code into artifact:

- Install maven

- Application run on port:

- 8080

- Package: build the artifact (.jar) from the code with Maven: mvn package

- Deploy package: run java -jar secretsanta-0.0.1-SNAPSHOT.jar, or, to run on a different port, java -Dserver.port=8999 -jar secretsanta-0.0.1-SNAPSHOT.jar.

- Access application:

- open browser and run http://ip:8080 or http://ip:8999

- The application runs only when the environment above is in place; on a machine without it, the application will not start.

- To run the application somewhere else, such as a Windows machine, you would normally have to prepare that computer or VM and install all the dependencies again.

- Docker solves this by packaging the application together with a lightweight base image and its dependencies, so the same image runs in any environment that has Docker.

- Docker builds the image from a Dockerfile, which defines these requirements: base OS image, JDK, port, and the start command java -jar app.jar.

- Upload this image to Docker Hub, from where the customer can download and run it. Running the image creates a container; the dependencies defined in the Dockerfile were installed when the image was built, so nothing has to be installed at run time.

- check project section for all the steps.
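As a rough illustration of the Dockerfile described above, the following shell sketch writes one for the secretsanta jar into a scratch directory. The base image `eclipse-temurin:17-jre`, the Java version, and the `target/` path are assumptions for illustration and are not taken from these notes; the project section may use different values.

```shell
# Sketch: generate a Dockerfile for the secretsanta jar.
# Assumptions (not from the notes): base image eclipse-temurin:17-jre and a
# jar already built by `mvn package` into target/.
write_dockerfile() {
  dir="$1"
  mkdir -p "$dir"
  cat > "$dir/Dockerfile" <<'EOF'
FROM eclipse-temurin:17-jre
WORKDIR /app
COPY target/secretsanta-0.0.1-SNAPSHOT.jar app.jar
EXPOSE 8080
CMD ["java", "-jar", "app.jar"]
EOF
}

workdir="$(mktemp -d)"
write_dockerfile "$workdir"
cat "$workdir/Dockerfile"

# With the Dockerfile placed in the project root (next to target/), the image
# could then be built and run; these need a Docker daemon, so they stay commented:
#   docker build -t secretsanta:0.0.1 .
#   docker run -d -p 8080:8080 secretsanta:0.0.1
```

The build and run commands are left as comments because they need a running Docker daemon and the built jar.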

- Containers are fast to create and use fewer hardware resources than VMs because they share the host kernel instead of booting a full guest operating system.

- An application can move from one host to another by moving its image: the same image runs unchanged on any host with a compatible Docker Engine. Moving the container does not convert one product into another; for example, going from Oracle on Windows to MySQL on Linux is still a database migration, although both databases can themselves run as containers.

- Cgroup (control group): a Linux kernel feature that limits and accounts for the resources (CPU, memory, and so on) a container can use. Docker relies on cgroups to enforce the limits you set on a container.

- Container runtime: the component that creates, runs, and manages containers. Commands entered through the CLI are passed to the runtime.

- dockerd

- containerd

- cri-o
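To make the cgroup idea concrete, here is a small sketch that shows which control groups the current shell belongs to. The helper name `show_cgroup` is made up for this example; `/proc/self/cgroup` is a standard Linux kernel file, so on a non-Linux system the sketch only prints a note.

```shell
# Sketch: print the control groups of the current process.
# show_cgroup is an illustrative helper name, not a Docker command.
show_cgroup() {
  if [ -r /proc/self/cgroup ]; then
    cat /proc/self/cgroup
  else
    echo "no /proc/self/cgroup on this system (not Linux?)"
  fi
}

show_cgroup

# Inside a container started with a memory limit, the limit itself is visible at
#   /sys/fs/cgroup/memory.max                       (cgroup v2)
#   /sys/fs/cgroup/memory/memory.limit_in_bytes     (cgroup v1)
```

Running the same function on the host and inside a container shows different cgroup paths, which is how the kernel tells the two apart when enforcing limits.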

Docker Installation: Docker Engine & Docker Desktop: it comes in 2 flavours, community edition (free) & enterprise edition (paid).

Docker Engine on Ubuntu / CentOS RHEL

- Method 1: Docker engine installation: Create a Ubuntu VM

- Check ubuntu version:

- $lsb_release -a

- Check if docker is installed:

- $docker --version (specific version display)

- $docker version (detailed version display)

- $docker info (full detailed version display)

- Root Access: Docker commands run with root privileges: Get root access

- $sudo su - (root user access)

- $whoami (root)

- $apt install docker.io (installs Docker from the Ubuntu repository)

- Error: E: Package 'docker.io' has no installation candidate (the local package index is out of date; update it and retry):

- $apt-get update

- $sudo apt install docker.io

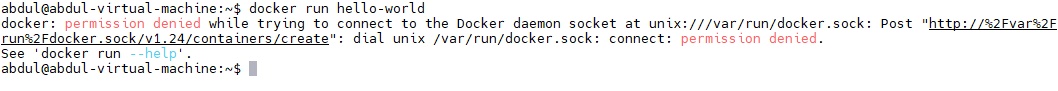

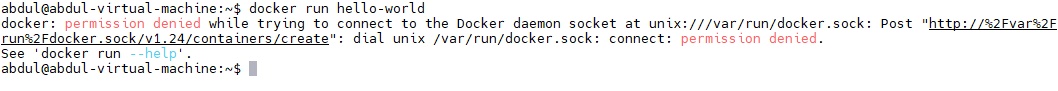

- Run hello-world to check docker installation:

- $docker run hello-world (by default only root can talk to the Docker daemon; to let a normal user run docker commands, add the user to the docker group as shown below)

- When you install docker, a group named docker is created. Any user added to this group can run docker commands; root can always run them.

- When you create a user, a group with the same name is also created; this is the user's primary group. You can add the user to additional (supplementary) groups.

- Add user (abdul) to docker group:

- $sudo usermod -aG docker abdul (-G sets the supplementary groups and -a appends to them instead of replacing the list; the primary group is changed with -g; docker is the group, abdul is the user). Log off and log on again, or run $newgrp docker to start a shell with the new group active.

- $sudo reboot or $newgrp docker

- $sudo chmod 666 /var/run/docker.sock (alternative that lets every local user access the Docker daemon, which is effectively root access; prefer the docker group)

- Start docker Service:

- $systemctl start docker or

- $sudo service docker start

- $systemctl enable docker

- $docker info

- Check docker status:

- $sudo systemctl status docker (or $sudo service docker status)
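The checks in this method can be combined into one small helper. This is only a sketch: `docker_status` is a made-up function name, and it relies on `docker info` exiting with a non-zero status when the daemon cannot be reached.

```shell
# Sketch: report whether the docker CLI exists and whether the daemon answers.
# docker_status is an illustrative helper name, not part of Docker.
docker_status() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "missing"
  elif docker info >/dev/null 2>&1; then
    echo "running"
  else
    # CLI is installed, but the daemon is stopped or this user lacks permission
    echo "installed-but-unreachable"
  fi
}

docker_status
```

A result of `installed-but-unreachable` usually points to either a stopped service (start it with systemctl) or a user who is not yet in the docker group.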

- Method 2: Docker engine installation through script:

- Create Ubuntu instance (t2 micro)

- $sudo su - (root user access)

- $curl -fsSL https://get.docker.com -o get-docker.sh

- $ls

- $sh get-docker.sh

- $docker --version

- Check docker status:

- $sudo systemctl status docker (or $sudo service docker status)

- Method 3: Install Docker from apt Repository

- Install Docker using apt Repository

- Create a ubuntu VM

- Step1: Updating the software Repository: (you can switch to root user and perform the installation)

- $sudo apt-get update

- Step 2: Download Dependencies:

- Allow your Ubuntu 20.04 system to access the Docker repositories over HTTPS by running.

- $sudo apt-get install apt-transport-https ca-certificates curl software-properties-common

- The above-mentioned command:

- Gives the package manager permission to transfer files and data over https.

- Allows the system to check security certificates.

- Installs curl, a tool for transferring data.

- Adds scripts for managing software.

- Step 3: Add Docker's official GPG key:

- $sudo install -m 0755 -d /etc/apt/keyrings

- $sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

- $sudo chmod a+r /etc/apt/keyrings/docker.asc

- Add the repository to Apt sources (a single command, shown on one line):

- $echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- $sudo apt-get update

- Step 4: Install Docker Engine (docker-ce = Docker Community Edition):

- $sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

- Step 5: Enable Docker Service:

- $sudo systemctl start docker

- $sudo systemctl enable docker

- $sudo systemctl status docker

- Step 6: Verify that the installation is successful by running the hello-world image:

- $docker --version

- $docker version (detailed)

- $docker info (detailed)

- $sudo docker run hello-world

- Add user permission to execute docker commands (for a normal user):

- $sudo usermod -aG docker username

- $newgrp docker (the change takes effect immediately in this shell; otherwise log out and back in, or restart the machine)

- $sudo chmod 666 /var/run/docker.sock (alternative that gives every local user access to the daemon; prefer the docker group)

- Check docker status:

- $systemctl status docker

- Removing Docker:

- $sudo apt-get remove docker docker-engine docker.io

- Installing Docker from Default Repositories:

- Step1: Updating the local repository:

- $sudo apt update

- Step2: Installing Docker

- $sudo apt install docker.io

- Step3: Check Docker Installation

- $docker --version

- $docker version

- $docker info

- Check docker status:

- $sudo systemctl status docker

- Add user permission to execute docker commands (for a normal user):

- $sudo usermod -aG docker username

- $newgrp docker (the change takes effect immediately in this shell; otherwise log out and back in, or restart the machine)

- $sudo chmod 666 /var/run/docker.sock (alternative that gives every local user access to the daemon; prefer the docker group)

- Docker Engine Installation on CentOS:

- $docker --version

- $sudo yum check-update

- $yum install docker* -y

- $systemctl start docker

- $systemctl enable docker

- $docker info

- Check docker status:

- $sudo systemctl status docker

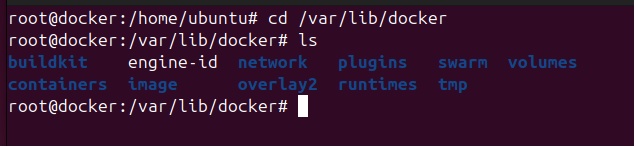

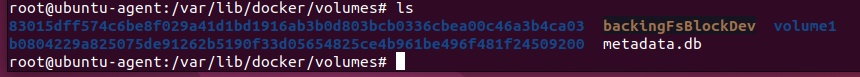

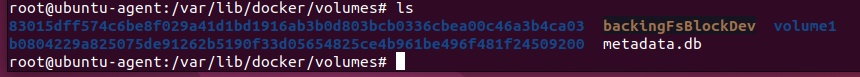

- Location where docker is installed:

- root@docker:~# docker info | grep -i root

- Docker Root Dir: /var/lib/docker

Docker Desktop on Windows / Mac & Linux

- https://docs.docker.com/docker-for-windows/install/

- On Windows, Docker Desktop runs on either the WSL 2 or the Hyper-V backend. When Hyper-V is enabled, some other hypervisors may not run alongside it.

- Once it is installed use powershell to run docker commands

- Windows:

- Download Docker Desktop Installer .exe (https://docs.docker.com/docker-for-windows/install/)

- Run Docker Desktop Installer.exe

- Default path C:\Program Files\Docker\Docker

- If your administrator account is different to your user account, you must add the user to the docker-users group:

- Run Computer Management as an administrator.

- Navigate to Local Users and Groups > Groups > docker-users.

- Right-click to add the user to the group.

- Sign out and sign back in for the changes to take effect.

- Install from Command Line:

- "Docker Desktop Installer.exe" install

- If you’re using PowerShell you should run it as:

- Start-Process 'Docker Desktop Installer.exe' -Wait install

- If using the Windows Command Prompt:

- start /w "" "Docker Desktop Installer.exe" install

- WSL:

- ubuntu:

- Install Docker Desktop on Ubuntu.

- Download DEB package.

- Install gnome terminal:

- $sudo apt install gnome-terminal

- Uninstall the tech preview or beta version of Docker Desktop for linux:

- $sudo apt remove docker-desktop

- Install the package using apt repository:

- $sudo apt-get update

- Change to the Downloads directory, where the DEB package was saved.

- $cd Downloads

- root@master:/home/abdul/Downloads# sudo apt-get install ./docker-desktop-amd64.deb

- By default, Docker Desktop is installed at /opt/docker-desktop

- Launch Docker Desktop.

- Navigate to the Docker Desktop application in your Gnome/KDE Desktop. (click show all application and look docker desktop and click)

- docker_desktop1.jpg

- Select Docker Desktop to start Docker. The Docker Subscription Service Agreement displays.

- Select Accept to continue. Docker Desktop starts after you accept the terms.

- Note that Docker Desktop won't run if you do not agree to the terms. You can choose to accept the terms at a later date by opening Docker Desktop.

- Alternatively, open a terminal and run:

- $systemctl --user start docker-desktop

- Docker Desktop Removal:

- Ubuntu:

- $sudo apt remove docker-desktop

- This removes the Docker Desktop package itself but doesn’t delete all of its files or settings.

- Manually remove leftover file.

- $rm -r $HOME/.docker/desktop

- $sudo rm /usr/local/bin/com.docker.cli

- $sudo apt purge docker-desktop

- This removes configuration and data files at $HOME/.docker/desktop, the symlink at /usr/local/bin/com.docker.cli, and purges the remaining systemd service files.

- Windows:

- From GUI:

- From the Windows Start menu, select Settings > Apps > Apps & features.

- Select Docker Desktop from the Apps & features list and then select Uninstall.

- Select Uninstall to confirm your selection.

- From the CLI:

- Locate the installer C:\Program Files\Docker\Docker\Docker Desktop Installer.exe

- Uninstall Docker Desktop:

- In PowerShell: Start-Process 'Docker Desktop Installer.exe' -Wait uninstall

- In the command prompt: start /w "" "Docker Desktop Installer.exe" uninstall

- After uninstalling Docker Desktop, some residual files may remain which you can remove manually. These are:

- C:\ProgramData\Docker

- C:\ProgramData\DockerDesktop

- C:\Program Files\Docker

- C:\Users\<your user name>\AppData\Local\Docker

- C:\Users\<your user name>\AppData\Roaming\Docker

- C:\Users\<your user name>\AppData\Roaming\Docker Desktop

- C:\Users\<your user name>\.docker

- Debian

- $sudo apt remove docker-desktop

- Manually remove leftover file.

- $rm -r $HOME/.docker/desktop

- $sudo rm /usr/local/bin/com.docker.cli

- $sudo apt purge docker-desktop

- Mac:

- From the GUI:

- Open Docker Desktop.

- In the top-right corner of the Docker Desktop Dashboard, select the Troubleshoot icon.

- Select Uninstall.

- When prompted, confirm by selecting Uninstall again.

- From the CLI:

- /Applications/Docker.app/Contents/MacOS/uninstall

- After uninstalling Docker Desktop, some residual files may remain which you can remove:

- rm -rf ~/Library/Group\ Containers/group.com.docker

- rm -rf ~/.docker

- With Docker Desktop version 4.36 and earlier, the following files may also be left on the file system. You can remove these with administrative privileges:

- /Library/PrivilegedHelperTools/com.docker.vmnetd

- /Library/PrivilegedHelperTools/com.docker.socket

Docker Engine Vs Docker Desktop

- Docker Engine: is the core of Docker. It is a client-server application with these major components:

- A server which is a type of long-running program called a daemon.

- A REST API which specifies interfaces that programs can use to talk to the daemon and instruct it what to do.

- A command line interface (CLI) client.

- Docker Engine takes in commands from the Docker client, and manages Docker objects like images, containers, networks, and volumes. It’s also responsible for building and running Docker containers.

- Docker Desktop: an application for macOS, Windows, and Linux machines for building and sharing containerized applications. It includes Docker Engine, the Docker CLI client, Docker Compose, and Kubernetes. It provides a graphical user interface (GUI) for working with Docker, which makes it more user-friendly than using Docker Engine alone. With Docker Desktop, you can easily start, stop, and manage Docker containers, and it comes preconfigured to work with your local system.

Where docker is installed: location of docker

- root@docker:~# docker info | grep -i root

- Docker Root Dir: /var/lib/docker

User Permission

- In Linux System:

- When you install docker, a group named docker is created. Any user added to this group can run docker commands; root can always run them.

- $cat /etc/group (lists all groups)

- When you create a user, a group with the same name is also created; this is the user's primary group. You can add the user to additional (supplementary) groups.

- $sudo usermod -aG docker abdul (-G sets the supplementary groups and -a appends to them instead of replacing the list; the primary group is changed with -g; docker is the group, abdul is the user). Log off and log on again, or run $newgrp docker to start a shell with the new group active.

- Create a user and set password and PasswordAuthentication set to yes

- Create the group if it does not exist: $sudo groupadd docker

- Add the user to the group: $sudo usermod -aG docker username

- or

- $sudo chmod 666 /var/run/docker.sock (every user on the computer can then run docker commands, which is effectively root access; prefer the docker group)

- $sudo systemctl restart docker
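Before changing any permissions, it can help to check whether a user already belongs to the docker group. The sketch below uses the standard `id` command; `in_group` is a made-up helper name for this example.

```shell
# Sketch: test whether a user is a member of a given group.
# in_group is an illustrative helper name; id -nG lists a user's group names.
in_group() {
  id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -Fqx "$2"
}

me="$(id -un)"
if in_group "$me" docker; then
  echo "$me is in the docker group"
else
  echo "$me is not in the docker group; consider: sudo usermod -aG docker $me"
fi
```

Group membership is read at login, so a user added with usermod appears in the `id` output immediately, but already-open shells only pick up the group after a new login or `newgrp docker`.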

- In Windows

- If your administrator account is different to your user account, you must add the user to the docker-users group:

- Run Computer Management as an administrator.

- Navigate to Local Users and Groups > Groups > docker-users.

- Right-click to add the user to the group.

- Sign out and sign back in for the changes to take effect.

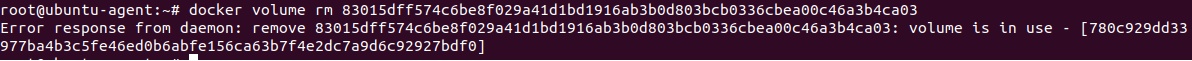

Troubleshooting

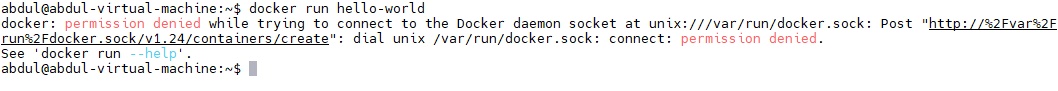

- Got permission denied while trying to connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

- Solution 1:

- Run the docker command with sudo:

- $sudo docker ....

- This runs the docker command as administrator. The error appears when the command is run by a user other than root who does not have permission on the socket.

- Solution 2: Add your user to the docker group:

- $sudo usermod -aG docker $USER (members of the docker group have access to the socket /var/run/docker.sock)

- $newgrp docker (no need to restart)

- Failed to start docker.service:

- root@master:~# systemctl start docker

- Failed to start docker.service: Unit docker.service not found.

- $sudo apt-get install docker-ce

- $systemctl start docker

- Control-Plane node status is Not Ready after KinD restart, same IP is assigned to control-plane and worker node,

- $systemctl stop docker

- $systemctl start docker

- $systemctl enable docker

- $init 6 (reboot)

Node JS on Ubuntu

- Method 1: Using snap package manager (it will be easy to upgrade/downgrade versions)

- In google search snap nodejs and click the link

- click on install button on top right corner.

- copy the command:

- $sudo snap install node --classic (select version to install and copy the command)

- $sudo snap install node --channel=23/stable --classic

- $node --version

- $npm --version

- To upgrade/downgrade, click drop down button and select version, copy command and run in ubuntu

- Instead of install you type refresh.

- $sudo snap refresh node --channel=21/stable --classic (downgrading to 21)

- $sudo snap refresh node --classic (latest version will install)

- Method 2: go to node js official page: nvm (node version manager)

- click download and select the required version and o/s using nvm

- click copy to clipboard and paste it in ubuntu and install

Commands used in Docker Container, Images, Host, Client, Daemon, volume storage

Container

- Containers are created from images, and images are built from a base OS layer, dependencies, libraries, and so on.

- Any number of containers can be created from one image.

- Custom image: after customizing and configuring a container, you can create a custom image from it.

- $docker container --help

- attach Attach local standard input, output, and error streams to a running container

- commit Create a new image from a container's changes

- cp Copy files/folders between a container and the local filesystem

- create Create a new container

- diff Inspect changes to files or directories on a container's filesystem

- exec Execute a command in a running container

- export Export a container's filesystem as a tar archive

- inspect Display detailed information on one or more containers

- kill Kill one or more running containers

- logs Fetch the logs of a container

- ls List running containers

- ls -a List all containers, including stopped and exited ones

- pause Pause all processes within one or more containers

- port List port mappings or a specific mapping for the container

- prune Remove all stopped containers

- rename Rename a container

- restart Restart one or more containers

- rm Remove one or more containers

- run Create and run a new container from an image

- start Start one or more stopped containers

- stats Display a live stream of container(s) resource usage statistics

- stop Stop one or more running containers

- top Display the running processes of a container

- $docker top container_ID

- unpause Unpause all processes within one or more containers

- update Update configuration of one or more containers

- wait Block until one or more containers stop, then print their exit codes

- Commands

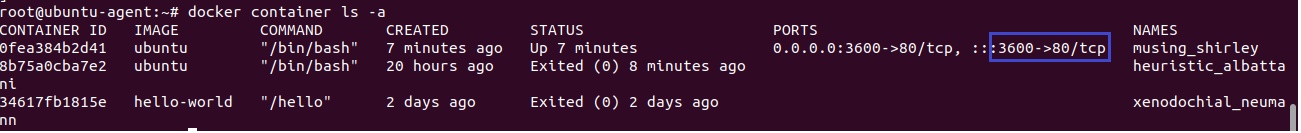

- Create Container

- $docker run -d --name xyz -p 9000:9000 image_name:tag (creates a container from the given image and tag in detached mode and publishes port 9000; in -p the first port is the host port and the second is the container port. The container runs on the Docker host.)

- Options used with the run command:

- --name: give a name to the container

- -it: open an interactive terminal in the container

- -d: run the container in detached/background mode

- -e: pass an environment variable

- tag: version of the image

- -v: attach an external directory or device as a volume

- --volumes-from: share volumes between containers

- --rm: delete the container on exit

- -p: port mapping, host port to container port, e.g. -p 8080:80 (8080 is the host port)

- -P: capital P, automatic port mapping; each exposed container port is mapped to a random high-numbered host port

- --link: link containers

- ctrl+p, ctrl+q: leave a running container without stopping it

- List Containers

- $docker container ls or $docker ps (list running containers)

- $docker container ls -a or $docker ps -a (list all containers, running and stopped)

- $docker container inspect cont_name / cont_id (detailed information about a container)

- Start / Stop / Restart Container

- $docker container start cont_name / cont_id (start a stopped container)

- $docker container stop cont_name / cont_id (stop a running container)

- $docker container stop $(docker ps -aq) (stop all running containers)

- $docker container restart cont_name / cont_id (restart a container)

- $docker container restart -t 10 cont_name / cont_id (restart, waiting up to 10 seconds for the container to stop before it is killed)

- Delete Container

- $docker container rm cont_name / cont_id (delete a stopped container)

- $docker container rm -f cont_name / cont_id (delete a running container forcefully)

- $docker container rm $(docker ps -aq) (delete all stopped containers)

- $docker container rm -f $(docker ps -aq) (delete all running and stopped containers)

- Logs

- $docker logs cont_name / cont_id (get the logs of a container)

- View open Ports

- $docker port cont_name / cont_id (view the ports open on a container)

- Port Mapping

- $docker container run -it -p 3600:80 ubuntu /bin/bash (creates a container with host port 3600 mapped to container port 80)

- List of containers:

- $docker container ls (list of running containers)

- $docker container ls -a (list of all containers running & stopped)

- $docker ps (list of running containers)

- $docker ps -a (list of all containers, running and stopped)

- location of containers: can view with root account, or it will give permission denied error

- root@docker:/var/lib/docker/containers

- Delete containers:

- $docker container rm container_ID (it will delete stopped container)

- $docker container rm -f container_ID (it will delete container forcefully, if running)

- $docker rm -f $(docker ps -aq) (deletes all containers, running or stopped; -q makes docker ps print only the container IDs)

- Create container using raw image: you must define -it

- $docker run -itd --name=web5 ubuntu

- $docker ps

- 692749995bce ubuntu "/bin/bash" 5 seconds ago Up 4 seconds web5

- A raw OS image has no long-running service, so we run it with -it (interactive terminal); the shell process keeps the container alive. Without it the container exits immediately.

- $docker top web5 (the /bin/bash process is running)

- $docker run -d --name=web6 ubuntu

- $docker ps (web6 is not listed: with nothing to run, the container exited. Using -it starts a terminal, so a process runs in the container and keeps it alive.)

- To login to raw container

- $docker attach web5

- come out of container without exiting

- Ctrl+P, then Ctrl+Q

- Create container using service image: you can define -d only

- $docker run -d --name=web1 nginx

- -d runs the container in detached mode (not attached to the current terminal), --name=web1 sets the container name, and nginx is the image name.

- location of containers: can view with root account, or it will give permission denied error

- root@docker:/var/lib/docker/containers (you will see long container IDs)

- process in linux system (host machine processes):

- creation of container is a process in linux

- $ps -ef (it will show a process is created for container)

- container_process1.jpg

- container process id = 8881

- If you kill process 8881, the container stops.

- $kill -9 8881

- program which runs terminal:

- $echo $SHELL (show interactive shell)

- /bin/bash (bash is the terminal program on which you enter commands)

- Login to container terminal

- $docker exec -it container_name bash

- you will get into bash terminal of container

- Resources of container created:

- mount

- vNIC

- process

- filesystem

- Processes of container: find out from outside the container

- $docker top container_name/ID

- Create container:

- $docker container run ubuntu

- It creates the container, but the container exits right away because no long-running process was started.

- $docker run ubuntu sleep 60

- It will create container and occupy the terminal for 60 seconds, you cannot run any commands on this terminal.

- open another terminal and run $watch docker container ls

- $docker run -d --name=web2 ubuntu

- It will run in detach mode.

- $docker run -it --name=web3 ubuntu

- It runs in interactive mode: the terminal is attached to the container's shell, and the container keeps running while that shell is open.

- $docker run -it --name=web4 ubuntu /bin/bash

- It will run in interactive mode, enter into ubuntu terminal.

- $docker run -it --name=webserver1 ubuntu /bin/bash

- it will give container name webserver1

- Details of container:

- $docker inspect container_name/ID

- Private IP is assigned "172.17.0.2" it is assigned by bridge network

- $docker network ls

- $docker network inspect bridge | grep -i subnet ("172.17.0.0/16")

- Get container statistics (cpu, memory usage ):

- $docker stats container_name

- Stop container:

- $docker container stop container_ID

- Stop all running containers:

- $docker stop $(docker ps -aq)

- Start container:

- $docker container start container_ID

- Restart container:

- $docker container restart container_ID

- Restart container, allowing up to 10 seconds for it to stop before it is killed:

- $docker restart -t 10 cont_name / cont_id

- Come out of container:

- If you run exit, you leave the container terminal, but the container stops.

- To leave without stopping it, press Ctrl+P, then Ctrl+Q.

- Get into container:

- $docker container attach container_ID/name

- $docker exec -it container_ID/Container_name /bin/bash

- root@jfjfov98234:/#ls

- bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

- The above looks like the folders of a Linux OS, but it is only the OS footprint taken from the base image. These folders contain the minimal required files.

- The following are ubuntu O/S files:

- bin boot dev home lib64 lost+found mnt proc run sbin.usr-is-merged srv sys usr bin.usr-is-merged cdrom etc lib lib.usr-is-merged media opt root sbin snap swap.img tmp var

- Remove container: running container will not remove, stop first then remove

- $docker container rm container_ID

- $docker container rm container_ID container_ID

- Remove all unused containers, volumes, images

- $docker system prune -a --volumes -f

- Remove container forcefully: a running container will also be removed

- $docker container rm -f container_ID

- Delete all stopped containers:

- $docker rm $(docker ps -aq)

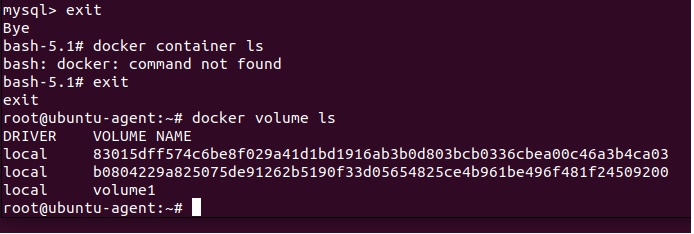

- Delete cache

- $docker builder prune -a

- Delete volume

- $docker volume prune -a

- Define hostname, DNS while creating container:

- $docker container run -it --hostname webserver --dns 8.8.8.8 ubuntu:14.04

- $hostname

- $ifconfig

- $cat /etc/resolv.conf

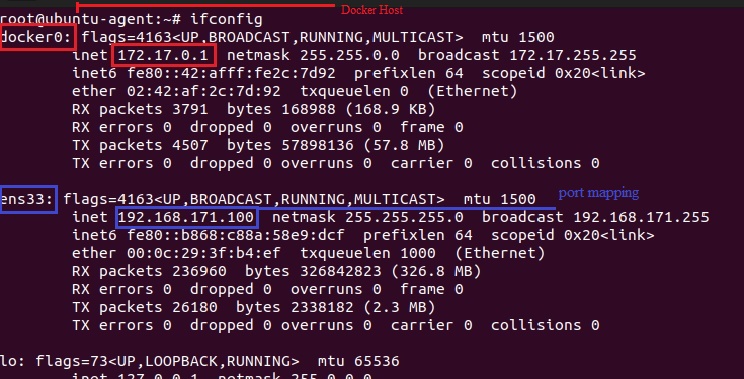

- Port Mapping:

- The VM on which Docker is installed is called the Docker host. A Docker bridge interface (docker0) is created on the Docker host and assigned an IP address.

- Containers get their IPs from this bridge network's address pool. By default, an application running on a container IP can be reached only from the Docker host.

- To access the application from outside the Docker host, configure port mapping.

- Map the port on the Docker host's main Ethernet interface (ens33), which has IP address 192.168.171.100 and Internet access.

- Assign port while creating container: ubuntu container is creating and deploying apache2

- root@ubuntu-agent:~# docker container run -it -p 3600:80 ubuntu /bin/bash

- root@0fea384b2d41:/# apt-get update (you are in ubuntu container terminal with /bin/bash)

- root@0fea384b2d41:/# apt-get install apache2

- root@0fea384b2d41:/# cd /var/www/html

- root@0fea384b2d41:/var/www/html# echo "Welcome to star Distributors / Port Mapping " > index.html

- root@0fea384b2d41:/var/www/html# service apache2 start

- Access the application from outside:

- root@ubuntu-agent:~# ifconfig

- copy IP address of host (ens33), in real time will take public IP address.

- open browser: http://192.168.171.100:3600
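To keep the order of the two ports straight, the following sketch splits a `-p` value into its host and container parts and builds the URL used above. The helper names `parse_port_mapping` and `published_url` are made up for this example, and only the simple two-part `host:container` form is handled (not the `ip:host:container` form).

```shell
# Sketch: split a -p HOST:CONTAINER mapping and derive the external URL.
# Both helper names are illustrative; only the two-part form is supported.
parse_port_mapping() {
  mapping="$1"
  host_port="${mapping%%:*}"        # text before the colon: port on the Docker host
  container_port="${mapping##*:}"   # text after the colon: port inside the container
  echo "host=$host_port container=$container_port"
}

published_url() {
  host_ip="$1"
  mapping="$2"
  echo "http://$host_ip:${mapping%%:*}"
}

parse_port_mapping 3600:80                # prints: host=3600 container=80
published_url 192.168.171.100 3600:80     # prints: http://192.168.171.100:3600
```

The browser always uses the host side of the mapping; port 80 is only visible inside the container's network.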

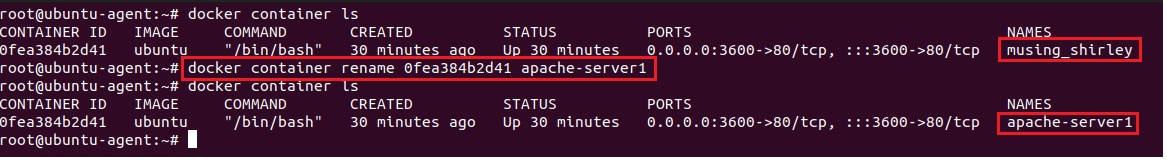

- Rename:

- $docker container rename container_ID newname

- root@ubuntu-agent:~# docker container rename 0fea384b2d41 apache-server1

- Get into container/get container bash terminal

- $docker exec -it container_name/ID /bin/bash

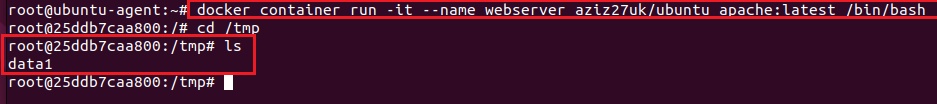

- Copy: copy file to a container

- create a file

- root@ubuntu-agent:~# echo "some text" > data1

- root@ubuntu-agent:~# docker container cp data1 container_ID:/tmp/ (copy file in a container's /tmp/ folder)

- Check in container's /tmp/ folder

- Kill: Forcefully stop the container.

- root@ubuntu-agent:~# docker container kill container_ID/name

- Wait: Block until one or more containers stop, then print their exit codes

- root@ubuntu-agent:~# docker container wait container_ID/name

- Pause / unpause:

- root@ubuntu-agent:~# docker container pause container_ID/name

- root@ubuntu-agent:~# docker container ls

- root@ubuntu-agent:~# docker container unpause container_ID/name

- Prune:

- $docker container prune (Remove all stopped containers)

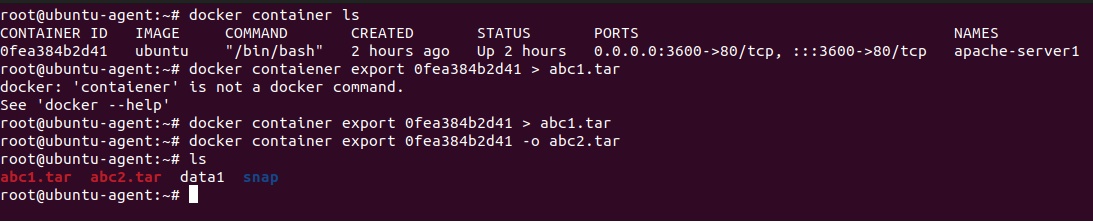

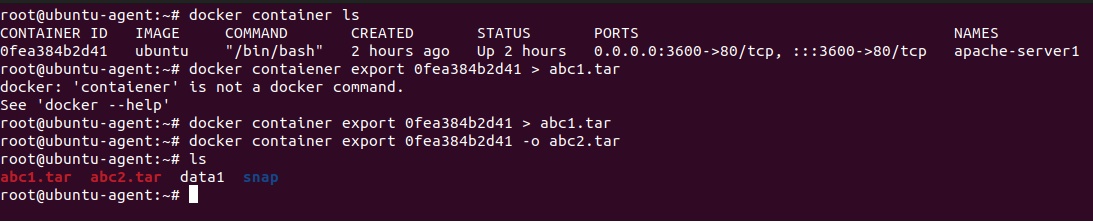

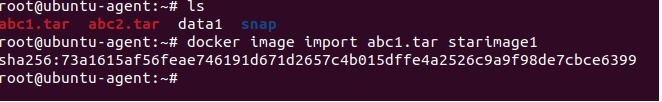

- Export:

- Exporting container which creates .tar file

- root@ubuntu-agent:~# docker container export 0fea384b2d41 > abc1.tar (instead of > you can use -o)

- root@ubuntu-agent:~# docker container export 0fea384b2d41 -o abc2.tar

- root@ubuntu-agent:~# ls (abc1.tar and abc2.tar created).

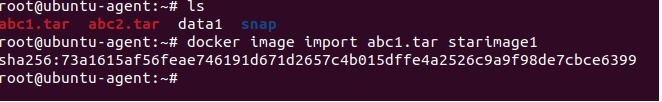

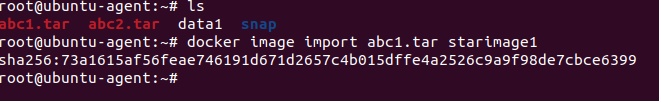

- Create image by importing the .tar file and deploy.

- root@ubuntu-agent:~#docker image import abc1.tar starimage1 (image with the name starimage1 will be created)

- root@ubuntu-agent:~# docker image ls

- root@ubuntu-agent:~# docker container run -it starimage1 /bin/bash


- Commit:

- Creating image of running container:

- root@ubuntu-agent:~#docker container commit container_ID image_name

- Limit the memory and CPU that a container can use:

- root@ubuntu-agent:~# docker run -it --memory="512m" --cpus="1.5" ubuntu:1 (ubuntu:1 is an image already present on the host)

- root@ubuntu-agent:~# docker stats container_ID (to check memory utilization)

- Check utilization:

- root@ubuntu-agent:~# docker stats --no-stream
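docker inspect reports the memory limit in bytes, while --memory takes a value with a unit suffix. A small sketch for comparing the two, assuming whole-number values only; mem_to_bytes is a hypothetical helper name:

```shell
# mem_to_bytes SPEC: convert a --memory value such as 512m or 1g to bytes.
# Docker treats the k, m and g suffixes as binary multiples (1m = 1024*1024 bytes).
mem_to_bytes() {
  num=${1%[bkmgBKMG]}
  case "$1" in
    *[kK]) echo $((num * 1024)) ;;
    *[mM]) echo $((num * 1024 * 1024)) ;;
    *[gG]) echo $((num * 1024 * 1024 * 1024)) ;;
    *)     echo "$num" ;;
  esac
}

# Example (run on the Docker host; the inspect value should match):
#   mem_to_bytes 512m
#   docker inspect --format '{{.HostConfig.Memory}}' container_ID
```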

- Create a container with a specific user, using user ID

- root@ubuntu-agent:~#docker run -u 1001:1001 ubuntu:1

- Read only container, no modification is allowed inside container:

- root@ubuntu-agent:~# docker container run --read-only -it -d -p 5000:80 ubuntu:1 (container is read only).

- root@ubuntu-agent:~# docker exec -it be495dd5a482 /bin/bash

- root@be495dd5a482:/# mkdir abc

- mkdir: cannot create directory 'abc': Read-only file system

- Give permission on /tmp folder to make changes:

- root@ubuntu-agent:~# docker container run --read-only -v /tmp --tmpfs /tmp -it -d -p 5000:80 ubuntu:1

- root@ubuntu-agent:~# docker exec -it ca621efbd40b /bin/bash

- root@ca621efbd40b:/# cd /tmp

- root@ca621efbd40b:/tmp# mkdir test (the test folder is created)

- Find out how many times a container has restarted:

- root@ubuntu-agent:~# docker inspect ca621efbd40b | grep -i restartcount

- "RestartCount": 0,

- Health Check:

- Clone Healthcheck repository: https://github.com/AbdulAziz-uk/Healthcheck-docker-demo.git

- root@ubuntu-agent:~# git clone https://github.com/AbdulAziz-uk/Healthcheck-docker-demo.git

- root@ubuntu-agent:~/Healthcheck-docker-demo# ls

- open dockerfile:

      # Base image
      FROM node:18-alpine

      # Set workdir
      WORKDIR /app

      # Copy app files
      COPY server.js .

      # Install curl and express
      RUN apk add --no-cache curl && npm install express

      # Expose port
      EXPOSE 8080

      # Add healthcheck
      HEALTHCHECK CMD curl --fail http://localhost:8080/health || exit 1

      # Run app
      CMD ["node", "server.js"]

- Build image:

- root@ubuntu-agent:~/Healthcheck-docker-demo# docker build -t healthcheck .

- Deploy image:

- root@ubuntu-agent:~/Healthcheck-docker-demo# docker run -d healthcheck

- root@ubuntu-agent:~/Healthcheck-docker-demo# docker container ls (check that STATUS shows healthy)
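Instead of reading the STATUS column by eye, the health state can be polled from a script. This is a sketch only, assuming the container was started from an image that defines a HEALTHCHECK; health_status and wait_healthy are hypothetical helper names:

```shell
# health_status CONTAINER: print the health state reported by the HEALTHCHECK
# (starting, healthy or unhealthy).
health_status() {
  docker inspect --format '{{.State.Health.Status}}' "$1"
}

# wait_healthy CONTAINER [TRIES]: poll once per second until the container is
# healthy; return 1 if it becomes unhealthy or TRIES (default 30) run out.
wait_healthy() {
  tries=${2:-30}
  while [ "$tries" -gt 0 ]; do
    status=$(health_status "$1") || return 1
    case "$status" in
      healthy)   return 0 ;;
      unhealthy) return 1 ;;
    esac
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Example:
#   wait_healthy container_ID 60 && echo "container is healthy"
```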

- Check logs from host machine without going inside container:

- create a folder:

- root@ubuntu-agent:~# mkdir logs

- Create an nginx container and mount the logs folder over the nginx log directory:

- root@ubuntu-agent:~# docker run -v $(pwd)/logs:/var/log/nginx nginx

- root@ubuntu-agent:~# ls logs (two log files have been created by the nginx container)

- Tail logs of the container:

- Follow the log of the container, starting from the last 20 lines:

- root@ubuntu-agent:~# docker logs -f --tail 20 ca621efbd40b

- Find out a specific process is running inside container:

- root@ubuntu-agent:~# docker exec -it ca621efbd40b sh -c "ps aux |grep java" (check java is running inside container)

- Container with restriction max process = 100, read only mode, memory = 256m

- root@ubuntu-agent:~# docker run -it --pids-limit 100 --memory=256m --read-only ubuntu:1 /bin/bash

- Try to create a file (error: read only file system)

- Multi-Container Link:

- docker --link

- docker compose

- docker networking

- python script

- Scenario 1:

- Step1: #docker run --name bb1 -it busybox

- To leave the interactive terminal without stopping the container, press Ctrl+P and then Ctrl+Q.

- Step2: #docker run --name bb2 -it --link bb1:bb1link busybox

- ping bb1 (You are terminal of bb2 and pinging to bb1)

- To ping bb1 to bb2

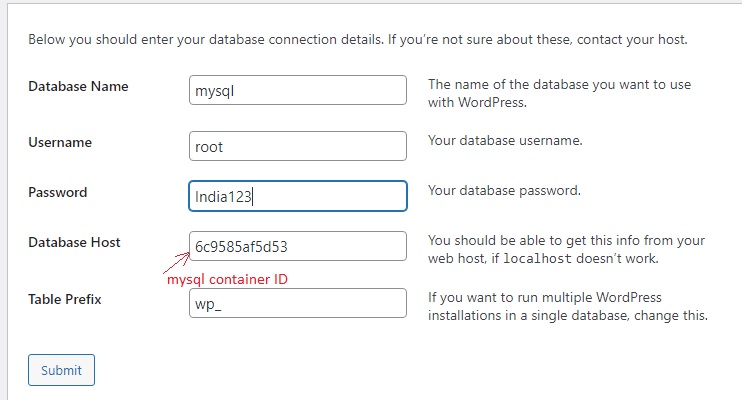
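Docker documents --link as a legacy feature, and a link resolves names in one direction only. Docker networking, listed above as one of the options, lets both containers reach each other by name. A minimal sketch, assuming the names bb1 and bb2 are free; link_with_network and the network name passed to it are hypothetical:

```shell
# link_with_network NET: start two busybox containers on a user-defined bridge
# network so that each can reach the other by container name.
link_with_network() {
  net=$1
  docker network create "$net" &&
  docker run -d --name bb1 --network "$net" busybox sleep 3600 &&
  docker run -d --name bb2 --network "$net" busybox sleep 3600 &&
  docker exec bb2 ping -c 2 bb1 &&
  docker exec bb1 ping -c 2 bb2
}

# Example:
#   link_with_network starnet
```

The sleep 3600 command only keeps the containers running long enough to test the ping in both directions.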

- Scenario 2:

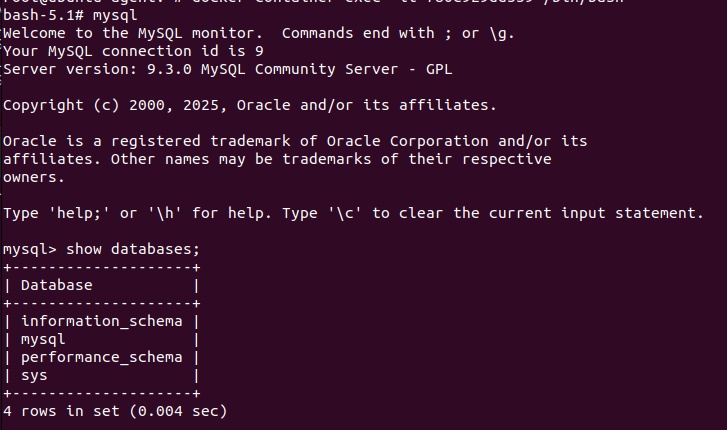

- Create two containers, MySQL and WordPress, and create a link between them.

- WordPress is a PHP-based application used to build websites; it is integrated with a database to store client input.

- Step1: #docker run --name mysql -d -e MYSQL_ROOT_PASSWORD=India123 mysql:5 (image names must be lowercase)

- On hub.docker.com, search for mysql, open the mysql image, and check the environment variables listed in the description.

- Click Tags to see the available versions of MySQL; the command above uses tag/version 5.

- Get into mySQL container:

- #docker exec -it mysql bash

- Connect to mySQL (check description in mySQL for commands)

- #mysql -u root -p (enter the password set in MYSQL_ROOT_PASSWORD)

- You will get the mysql prompt.

- mysql> show databases; (it will show databases information_schema, mysql, performance_schema, sys)

- mysql> use sys;

- Create the tables: search google.com for "emp and dept tables for mysql" (justinsomnia) and get the code.

- Copy the emp and dept code and paste it at the prompt; it creates two tables.

- mysql> select * from emp;

- mysql> select * from dept;

- Step 2: create wordpress container and linked with mysql (define port as wordpress can be accessed on browser)

- #docker run --name starwordpress -d -p 9090:80 --link mysql:mysqldatabase wordpress

- Access wordpress in any browser http://publicIP:9090

- Provide the database information when prompted.

- Scenario3: Create CICD 3 tier architecture environment in docker container.

- Step1: create jenkins container

- $docker run --name development -d -p 5050:8080 jenkins/jenkins

- Access with any browser http://publicIP:5050

- To get password, get into jenkins

- $docker exec -it development bash

- $cat /var/jenkins_home/secrets/initialAdminPassword

- Step2: Create tomcat container and linked with jenkins (development)

- #docker run --name testing -d -p 6060:8080 --link development:jenkins tomcat:9.0

- Step3: create a second tomcat container and link it with jenkins

- #docker run --name production -d -p 7070:8080 --link development:jenkins tomcat:9.0

- #docker container ls (3 containers running: 1 jenkins and 2 tomcat)

- check the testing server in any browser: http://publicIP:6060

- check the production server in any browser: http://publicIP:7070

- Error: Description The origin server did not find a current representation for the target resource or is not willing to disclose that one exists or 404 error

- Resolve:

- Get into the tomcat container: #docker exec -it testing bash

- #cd /usr/local/tomcat

- #cp -r webapps.dist/* webapps

- Now access the tomcat in the browser.

- Scenario4: Create jenkins master and jenkins slave:

- Step1: #docker run --name master -d -p 6060:8080 jenkins/jenkins

- Step2: #docker run --name slave -it --link master:jenkins ubuntu

- Step3: download slave.jar file from master.

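- #wget master:8080/jnlpJars/slave.jar (fails with "wget: command not found", so install wget first; inside the link use the container port 8080, not the published host port 6060)

- #apt-get update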

- #apt-get install wget

- #wget master:8080/jnlpJars/slave.jar

- Step4: Login to Jenkins and install plugin docker

- manage jenkins > manage plugin > Available > docker

Images

- Images: An image is a combination of the binaries and libraries that an application/software needs in order to run. Docker packages the required binaries and libraries into a docker image, and you create containers from that image.

- Types of Images:

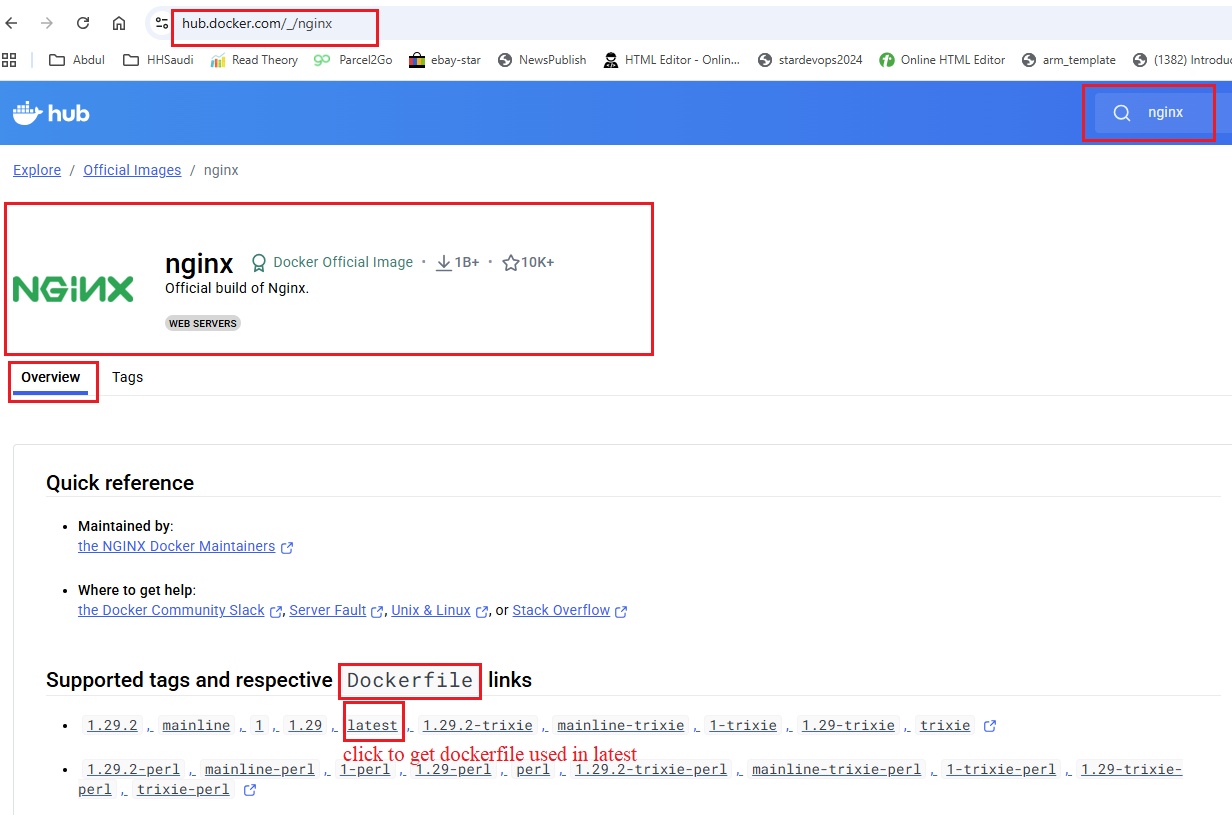

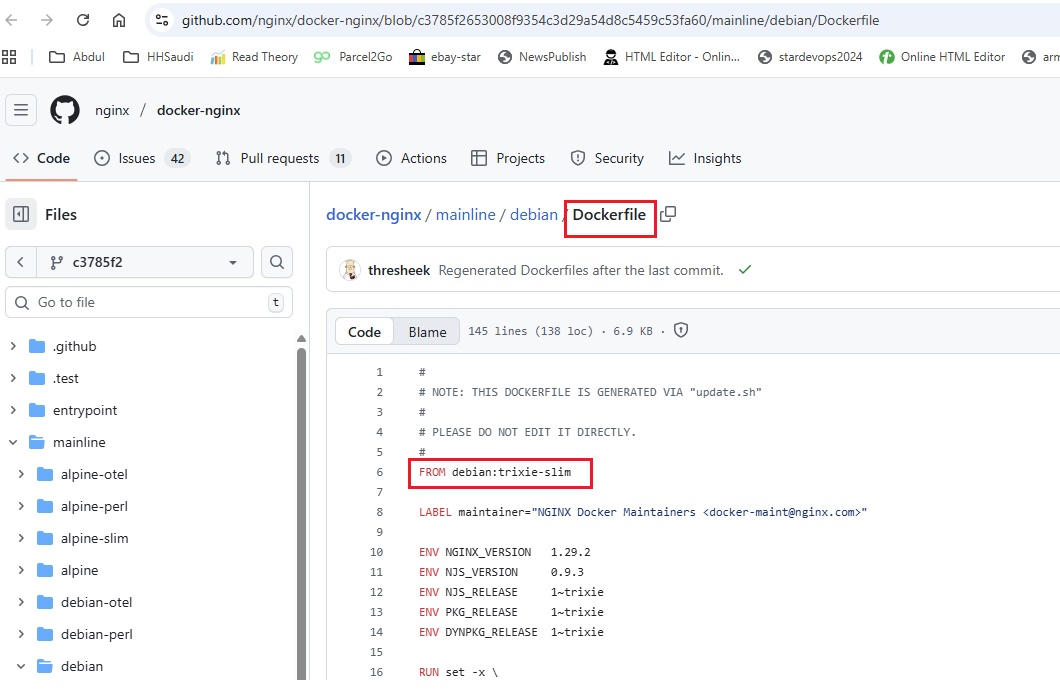

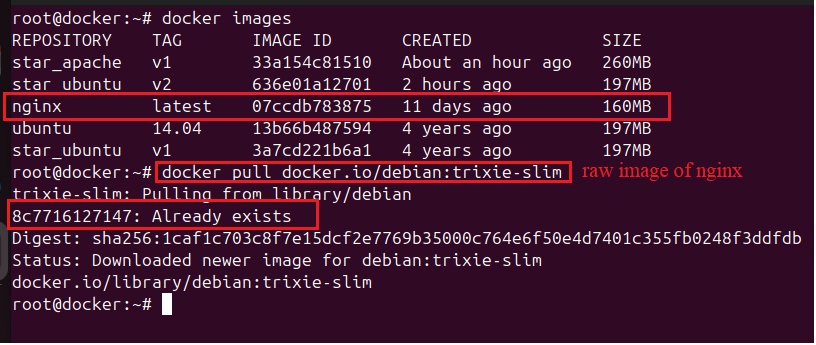

- Raw Image: O/S minor footprint: search nginx in docker hub, click any image and it will open in github where you can see FROM xxxx

- Service Images: Full fledge O/S or software (it includes raw image)

- $docker pull docker.io/ubuntu

- Ubuntu image contains raw or minor footprint of ubuntu, it contain basic commands which is required to maintain application, like cd command, ls command etc.

- raw_image1.jpg

- It will open github dockerfile, check FROM

- raw_image2.jpg

- if you try to download the raw image, it gives the message that image is already downloaded, it is inside ubuntu image.

- raw_image3.jpg

- List of images:

- $docker images (list of images in local repository )

- $docker system df (show docker disk usage)

- $docker system info

- Search image:

- $docker search docker.io/nginx

- Go to docker.io in browser and search nginx

- Parent/child image:

- when you have service image you cannot delete raw image.

- Pull nginx image: It will pull from docker.io if you do not mention registry name.

- Pull nginx image from docker.io repository:

- $docker pull nginx:latest

- we have not defined url for this repository, it will go to hub.docker.com registry (docker.io) and search for nginx image with latest version and download.

- you can define repository

- $docker pull docker.io/nginx:latest

- $docker images

- location of image on docker host: /var/lib/docker/image/overlay2/imagedb/content/sha256/

- Pull sonarqube lts-community image

- $docker pull sonarqube:lts-community (imagename:tag, where tag is used for version, it will check the local repository and if not found then go to docker repository)


- Delete dangling images:

- Delete the dangling build cache:

- $docker builder prune

- Delete all unused build cache, not only the dangling cache:

- $docker builder prune -a

- Delete dangling images and dangling build cache to free space.

- $docker system df (it will show the space utilization)

- Pull jenkins image from jenkins community

- search images in jenkins community

- $docker search jenkins/jenkins

- pull image from jenkins community

- $docker pull jenkins/jenkins

- search images in docker.io community

- $docker search docker.io/jenkins

- download from docker community

- $docker pull docker.io/jenkins/jenkins

| Command | Description |
| --- | --- |
| $docker image --help | Help on the image command |
| $docker pull image_name:tag | Download a docker image |
| $docker search image_name | Search for a docker image |
| $docker images or $docker image ls | List all docker images |
| $docker image ls -a | List all images, including intermediate ones |
| $docker image rm image_ID | Delete image |
| $docker image rm -f image_ID | Delete image forcefully |
| $docker push image_name | Upload a docker image |
| $docker rmi image_name | Delete a single docker image |
| $docker system prune -a | Delete all unused images, stopped containers, unused networks and build cache |
| $docker commit container_name/container_id image_name | Create a docker image from a container |
| $docker build -t image_name . | Create a docker image from a Dockerfile |
| $docker inspect image_name | Get detailed information about an image |
| $docker image save -o tarfile_name image_name | Save an image as a tar file |
| $docker image load -i tarfile | Load an image from a tar file |
| $docker container commit container_ID image_name | Create an image from a running container |

- Lab: pull a base image, build a custom image, push and pull the image

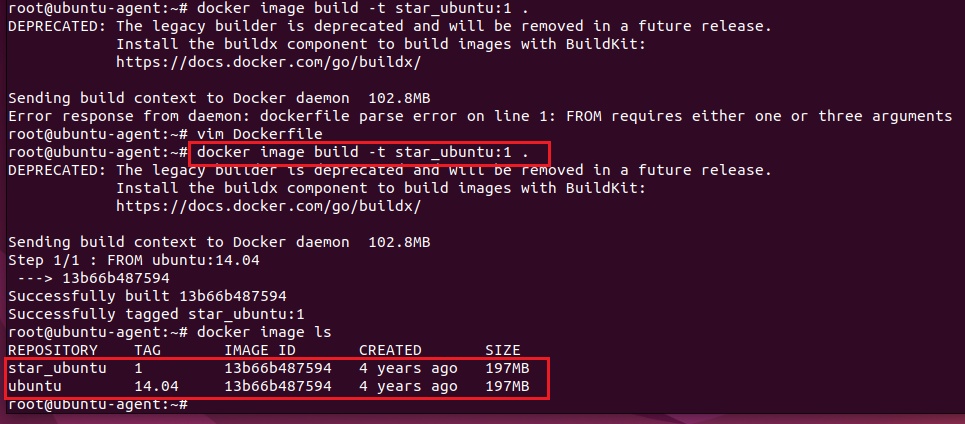

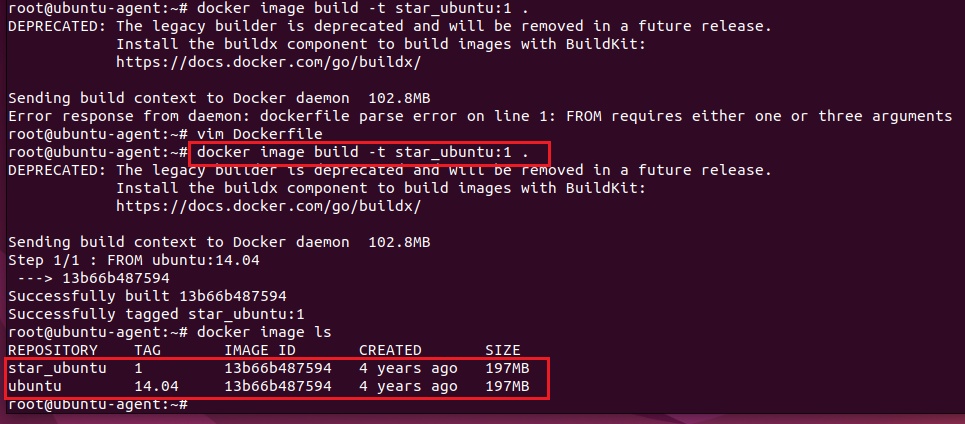

- Pull ubuntu image from docker:

- Creating a custom image of ubuntu with docker file.

- create a Dockerfile

- $vim Dockerfile (if you use a different file name, pass it with -f filename when building), enter the following code

      FROM ubuntu:14.04

- FROM is used to define the base image. Save & exit.

- Build Image:

- $docker build -t star_ubuntu:1 . (-t sets the name and tag; the dot at the end is the build context, the current directory, where the Dockerfile is expected; for a custom file name add -f filename)

- A custom ubuntu image with the name star_ubuntu:1 has been created.

- Both IMAGE IDs are the same: no changes were made and no new layer was added while building the custom image.

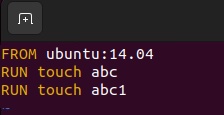

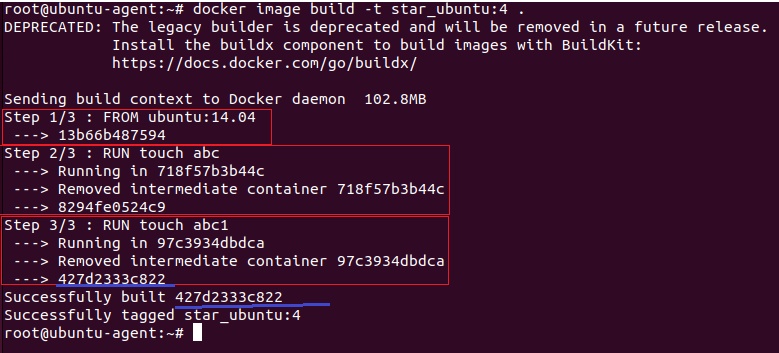

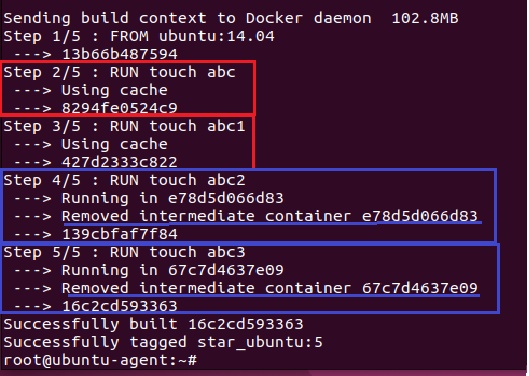

- Layers in Docker file:

- Every Dockerfile instruction that changes the filesystem (for example RUN, COPY, ADD) adds a layer; a step such as downloading a package takes space and is stored as a layer. Changing directory (cd) writes nothing, so it adds no data to the image.

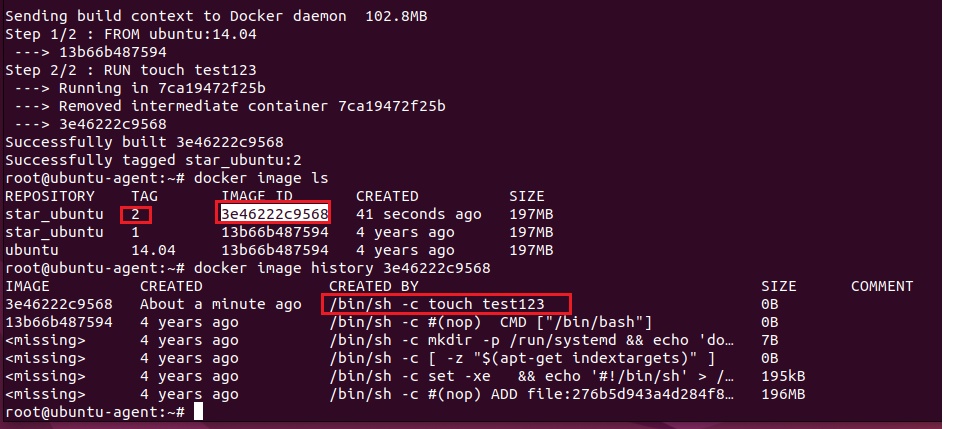

- Create a file test123 in the new custom image of ubuntu to add a new layer.

- Edit Dockerfile:

- $ vim Dockerfile

- FROM ubuntu:14.04

RUN touch test123 (this is a layer where it creates test123 file), save & exit

- Build an image 2:

- Edit Dockerfile:

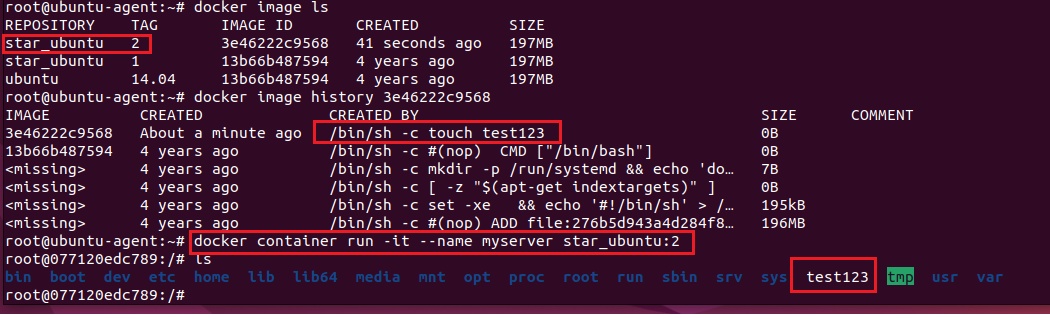

- Create a continer using custom image (star_ubuntu:2):

- Lab:

- Create a custom image of ubuntu:14.04 that runs these steps: apt-get update; install apache2, tree, openssh-server and openssh-client; change directory to /var/www/html; change index.html; start the apache2 service.

- $ vim Dockerfile

      FROM ubuntu:14.04
      # create a file test123
      RUN touch test123
      # update apt repository, install apache2
      RUN apt-get update && apt-get install -y apache2
      # install tree and openssh
      RUN apt-get install -y tree openssh-server openssh-client
      # change directory (a RUN cd does not carry over to later instructions, so WORKDIR is used)
      WORKDIR /var/www/html
      # change index.html file
      RUN echo "Welcome to Star Distributors " > /var/www/html/index.html
      # start apache2 service (runs only during the build; start it again inside the container)
      RUN service apache2 start

- Save & exit.

- Build an image 3:

- $ docker image build -t star_ubuntu:3 .

- Create container with image star_ubuntu:3

- $docker container run -it --name webserver1 star_ubuntu:3

- check apache2 service status

- $service apache2 status

- start apache2 service

- $service apache2 restart

- $docker inspect webserver1 (displays the container's IP address)

- Access the site

- $curl 172.17.0.2

- Lab:

- Create an image whose tag is taken from the Git commit: after committing to GitHub, the short commit hash is used as the tag when building the image.

- Boardgame repository is taken for this lab,

- install java and maven and Build the image.

- $docker build -t boardgame:$(git rev-parse --short HEAD) .

- Create an image with tag name as per the date

- root@ubuntu-agent:~# docker build -t ubuntu:release-$(date +%Y-%m-%d) .

- root@ubuntu-agent:~#docker image ls

- REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu release-2025-05-13 7d0907079ba5 15 seconds ago 211MB
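The two tagging schemes above can be wrapped in small helpers so the same tag is reused for build and push. A sketch, assuming git is installed when commit_tag is used; date_tag, commit_tag and image_ref are hypothetical helper names:

```shell
# date_tag: print a tag such as release-2025-05-13 from today's date.
date_tag() {
  printf 'release-%s\n' "$(date +%Y-%m-%d)"
}

# commit_tag: print the short hash of the current Git commit; fails outside
# a Git repository.
commit_tag() {
  git rev-parse --short HEAD
}

# image_ref NAME TAG: join repository name and tag into NAME:TAG.
image_ref() {
  printf '%s:%s\n' "$1" "$2"
}

# Example:
#   docker build -t "$(image_ref boardgame "$(commit_tag)")" .
#   docker build -t "$(image_ref ubuntu "$(date_tag)")" .
```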

- Delete image:

- $docker rmi imagename

- $docker image rm imagename

- Remove dangling images (images with <none> as name and <none> as tag):

- root@ubuntu-agent:~# docker rmi $(docker images -f "dangling=true" -q)
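When there are no dangling images, the command substitution above is empty and docker rmi fails because it requires at least one argument. A sketch that guards against this case; remove_dangling is a hypothetical helper name:

```shell
# remove_dangling: delete dangling images, doing nothing when there are none.
remove_dangling() {
  ids=$(docker images -f "dangling=true" -q)
  if [ -z "$ids" ]; then
    echo "no dangling images"
    return 0
  fi
  # $ids is intentionally unquoted so each ID becomes its own argument.
  docker rmi $ids
}
```

docker image prune removes dangling images as well and handles the empty case itself.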

- Find out how many images created after a particular image:

- docker image ls -f "since=ubuntu:1"

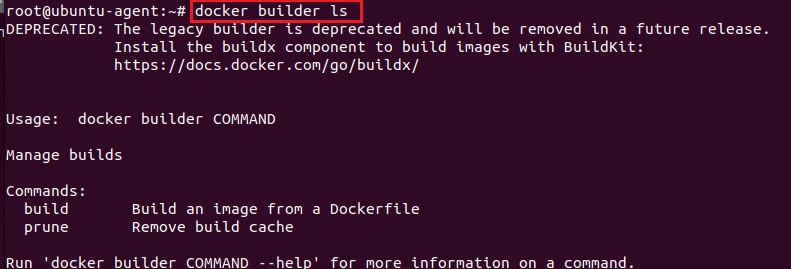

- Multi Architect/Platform Images:

- docker builder is not installed, If you have installed docker desktop than builder is installed.

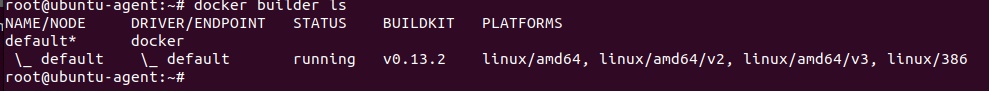

- $docker builder ls

- Install docker buildx:

- $sudo apt install docker-buildx (it is an extension to buildkit)

- if you check all supported linux/amd64 architecture, to support arm64 architecture.

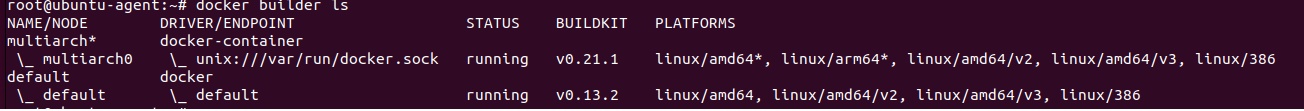

- $docker buildx create --name multiarch --platform linux/amd64,linux/arm64 --driver docker-container --bootstrap --use

- $docker builder ls

- now it supports arm64 and amd64

- create an image:

- $vim Dockerfile

      FROM ubuntu
      CMD ["echo", "ubuntu image with multi platform"]

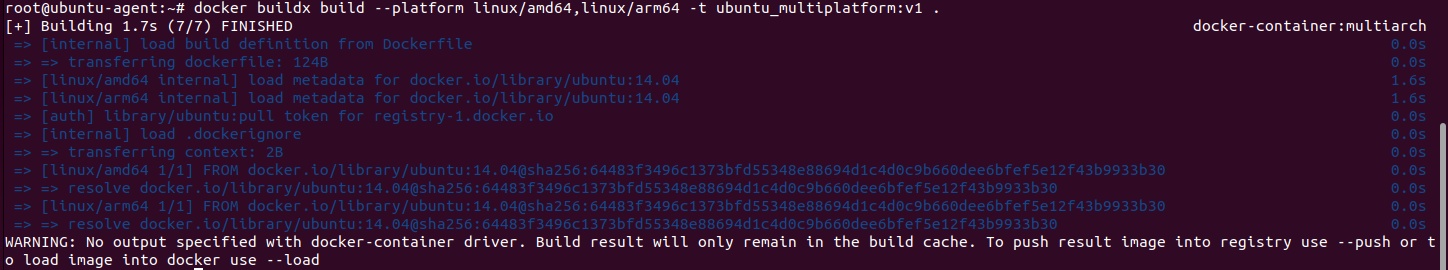

- $docker buildx build --platform linux/amd64,linux/arm64 -t ubuntu_multiplatform:v1 .

- $docker buildx build --platform linux/amd64,linux/arm64 --push -t ubuntu_multiplatform:v1 .

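- Error: failed to solve: failed to push ubuntu_multiplatform:v1: server message: insufficient_scope: authorization failed

- A multi-platform image is not stored in the local docker image store; it has to be pushed to Docker Hub, so the image name must start with your Docker Hub username.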

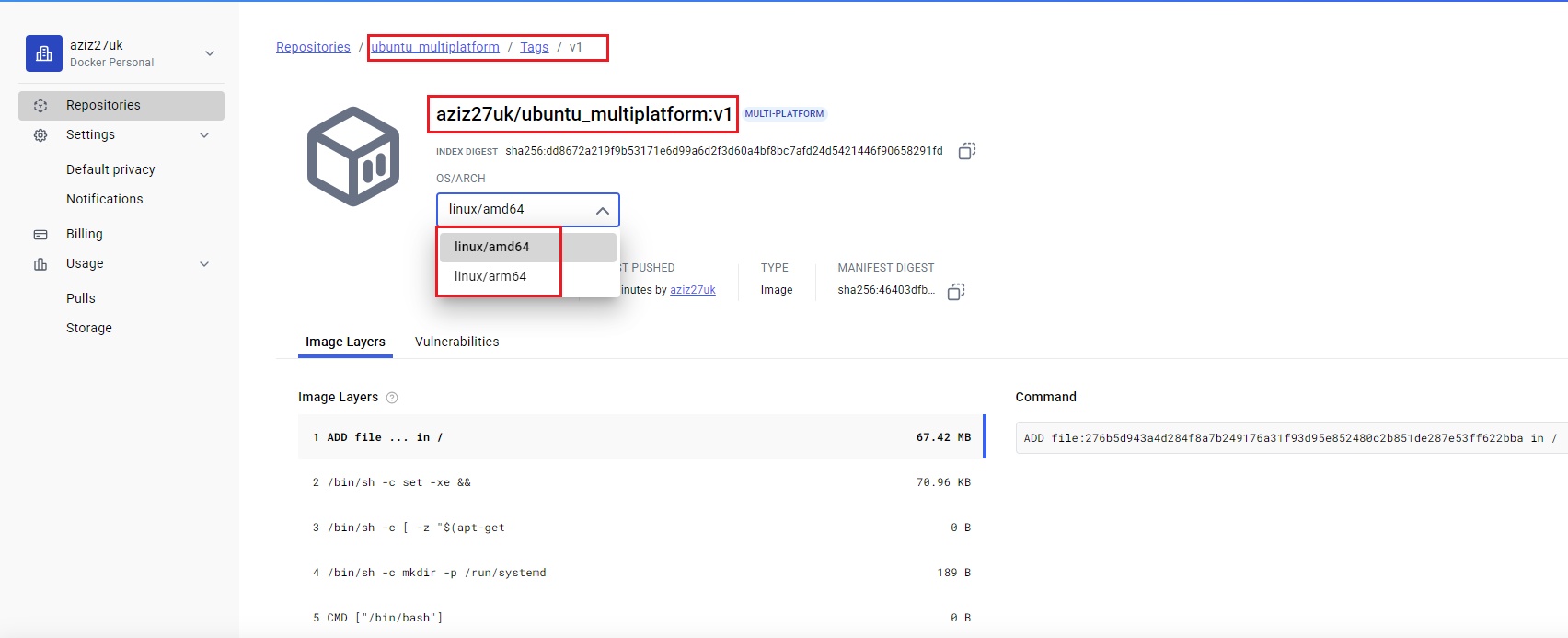

- $docker buildx build --platform linux/amd64,linux/arm64 --push -t aziz27uk/ubuntu_multiplatform:v1 .

- Go to docker hub and check the image:


- Test: Create two VMs (amd64 & arm64)

- deploy on both vms

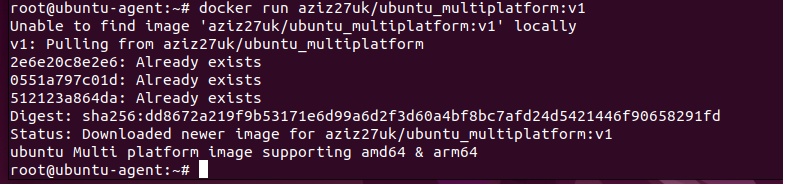

- $docker run aziz27uk/ubuntu_multiplatform:v1


- Image Layers:

- Docker images are built from layers; when you pull an image from Docker Hub, a number of layers are downloaded.

- When you first pull an image on a fresh AWS instance, all of its layers are downloaded.

- When you pull a second image, fewer layers may be downloaded: layers that the second image shares with the first are already present and are not downloaded again, only the new layers are. The same applies to further pulls.

- When you delete the first image, only the layers that no other image depends on are deleted.
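Layer sharing can be checked by comparing the layer digests that docker image inspect lists for two images. A rough sketch, assuming both images are already pulled; layers_of and shared_layers are hypothetical helper names:

```shell
# layers_of IMAGE: print one layer digest per line.
layers_of() {
  docker image inspect --format '{{range .RootFS.Layers}}{{println .}}{{end}}' "$1"
}

# shared_layers IMAGE_A IMAGE_B: print the layer digests present in both images.
shared_layers() {
  a=$(mktemp) && b=$(mktemp) || return 1
  layers_of "$1" | grep . | sort > "$a"
  layers_of "$2" | grep . | sort > "$b"
  comm -12 "$a" "$b"
  rm -f "$a" "$b"
}

# Example:
#   shared_layers nginx:latest star_ubuntu:3
```

An empty result means the two images have no layer in common, so pulling one does not shorten the download of the other.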

Host

- The host o/s on which docker is installed is called docker host.

Client

- The docker client is the command-line tool (docker) that you use in a terminal to access docker. It is installed together with docker; it takes your commands and passes them to a background process called the daemon.

LAMP Architecture

- A LAMP environment can be created for developers who are building a website using open source technologies.

- L = Linux o/s

- A = Apache web server (this lab runs Tomcat in a container as the application server)

- M = MySQL as the backend database

- P = PHP, the language the application is developed in

- On a Linux machine, create MySQL, PHP and Tomcat containers and link them with each other.

LAMP Architecture Lab:

- Step1: Install Linux ubuntu instance

- log into the ubuntu instance and install docker

- Step2: create mySQL container

- Step3: create tomcat container and linked with mySQL

- Step4: create php container and linked with mySQL & tomcat

- #docker run --name myphp -d --link mydb:mysql --link apache:tomcat php:7.2-apache

- #docker container ls (3 containers running)


Daemon

- The daemon analyses each command and routes it to one of the following:

- Docker Images:

- Docker Containers:

- Docker Registry: it is where docker images are saved.

- public registry: Maintained by hub.docker.com

- private registry: it is private in nature

Containers: tomcat, ubuntu, nginx, httpd, mysql, jenkins, apache2, python application, SonarQube

Syntax and parameters used in creating containers.

- $docker run -it -d -p <hostport>:<container_port> --name <container_name> <image_name> <shell>

- $docker run -it -d -p 8080:80 --name=abc image_name /bin/bash

- parameters:

- -it = interactive terminal: when the container is created, a terminal of the container is opened. The shell depends on the image; /bin/bash or sh can be used. Without it, a container whose main process is a shell is created and exits immediately.

- -d = the container runs detached, in the background.

- -p or -P :

- Used for port mapping between a host port (port of the PC/VM) and a container port. Outside traffic reaches the PC/VM IP address on host port 8080, which is mapped to container port 80.

- With -p you enter the port numbers yourself as host_port:container_port. The right-hand side is the port the application listens on inside the container, so it is fixed by the image; the left-hand side is the host port and can be any available port. With capital -P, docker publishes every port the image exposes on an available host port of its own choosing.

- port_mapping5.jpg

- Accessing within a VM:

- port_mapping3.jpg

- Accessing from outside VM:

- port_mapping4.jpg

- --name=abc: the name abc is assigned to the container; if you do not define a name, docker assigns a random one.

- image_name: the image used to create the container; if the image is not already in the local repository, docker searches the docker registry and downloads it.

- /bin/bash or sh: which shell is available depends on the image.
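With -P the host port is chosen by docker, so it has to be looked up after the container starts. A small sketch using docker port; published_port is a hypothetical helper name, and only the first mapping printed is used:

```shell
# published_port CONTAINER CONTAINER_PORT: print the host port that docker
# mapped to CONTAINER_PORT (first mapping only).
published_port() {
  line=$(docker port "$1" "$2" | head -n 1)
  [ -n "$line" ] || return 1
  printf '%s\n' "${line##*:}"
}

# Example:
#   docker run --name mynginx -P -d nginx
#   curl -s "http://127.0.0.1:$(published_port mynginx 80)"
```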

- code

Tomcat

- $docker run -it -d -p <hostport>:<container_port> --name <container_name> <image_name> <shell>

- code

- code

Jenkins

- Step 1: #docker pull jenkins/jenkins (the old jenkins image has been deprecated for over 2 years in favor of the jenkins/jenkins:lts image provided and maintained by the Jenkins Community as part of the project's release process. Use: docker pull jenkins/jenkins, then docker run -p yourportNo:8080 --name=jenkins-master -d jenkins/jenkins)

- Step2: #docker run --name myjenkins -p 9090:8080 -d jenkins/jenkins

- Step3: access jenkins: open browser http://publicIP:9090 or in VM if through docker host IP in browser http://192.168.171.100:9090

- Step4: Recover password:

- Get into jenkins and get bash prompt as you are in docker

- #docker exec -it myjenkins bash

- #cat /var/jenkins_home/secrets/initialAdminPassword (password will be displayed)

- You can access the jenkins home directory either by mapping it (/var/jenkins_home) to your machine's local file system, or

- by specifying the --volume option in the run command (--volume jenkins-data:/var/jenkins_home) and accessing it through the terminal.

ubuntu

- #docker run --name myubuntu -itd ubuntu:14.04 (-it keeps the shell open so the detached container does not exit)

- #docker container ls

- To get into container: #docker exec -it container_ID /bin/bash

Nginx

- #docker run --name mynginx -P -d nginx (docker maps the default port to a random high-numbered free port on the host; if you use small -p, you define the port number of your choice)

- This creates the container in detached mode, pulling the image if needed, and assigns the port automatically with -P (capital P). Port mapping is done for applications that are accessed through a browser.

- $docker container ls (get port number)

- To get into container: #docker exec -it container_ID /bin/bash

- Access through browser: http://192.168.171.100:32678 (the port mapping was defined when the container was created, so it can be accessed from outside using the host IP address with the port number)

httpd

- #docker run --name webserver -P -d httpd

- #docker container ls (take port number)

- To get into container: #docker exec -it docker_ID /bin/bash

- open browser and use with host IP http://192.168.171.100:port_number

- code

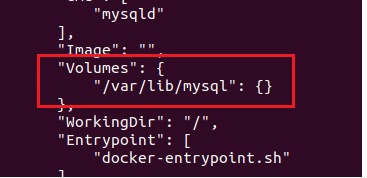

mysql

- It requires environment variable while creating container.

- #docker run --name mysql -d -e MYSQL_ROOT_PASSWORD=India123 mysql:5 (it download the image mysql version 5 and create container)

- To open interactive terminal in bash

- #docker exec -it mysql /bin/bash

- you will be in mysql container and to connect to mysql database

- #mysql -u username -p

- you will get the mysql prompt

- mysql> show databases; (it will show databases information_schema, mysql, performance_schema, sys)

- mysql> use sys;

- create the tables: search google.com for emp and dept tables for mysql (https://justinsomnia.org/2009/04/the-emp-and-dept-tables-for-mysql/) and copy the code

- paste it so it will create two tables.

- mysql> select * from emp;

- mysql> select * from dept;

- code

Apache2

- Create a ubuntu container in interactive mode and get into container:

- $docker container run -it ubuntu /bin/bash

- update ubuntu:

- root@8b75a0cba7e2:/# apt-get update

- Install apache2 in the container:

- root@8b75a0cba7e2:/# apt-get install apache2

- Go to html folder to make changes in index.html

- root@8b75a0cba7e2:/# cd /var/www/html

- root@8b75a0cba7e2:/var/www/html# echo "Welcome to Star Distributors / Training " > index.html

- Start apache2 service (httpd)

- root@8b75a0cba7e2:/var/www/html# service apache2 start (make sure you are inside the container and in the html folder)

- Get more details of container with inspect command

- root@ubuntu:~#docker container inspect 8b75a0cba7e2

- Access web site

- root@ubuntu:~#curl https://172.17.0.2

- Try with http

- open browser: http://172.17.0.2

- Error:

- Check resource utilisation by container:

- $docker container top container_ID

- $docker container stats container_ID

- $free -h


Python application

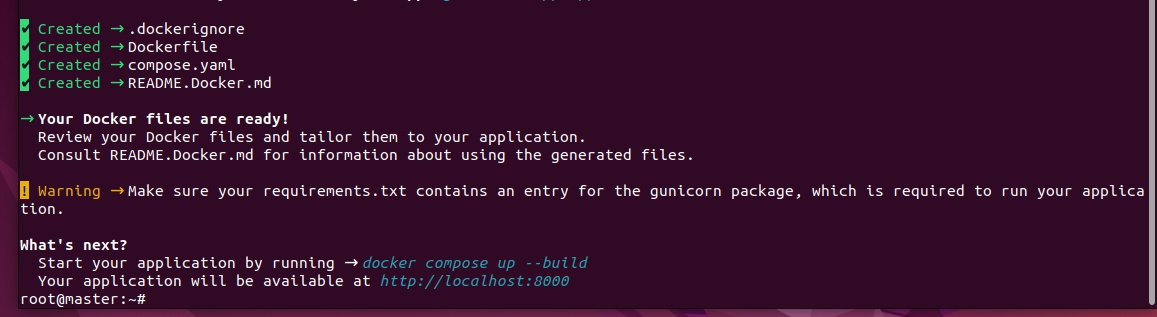

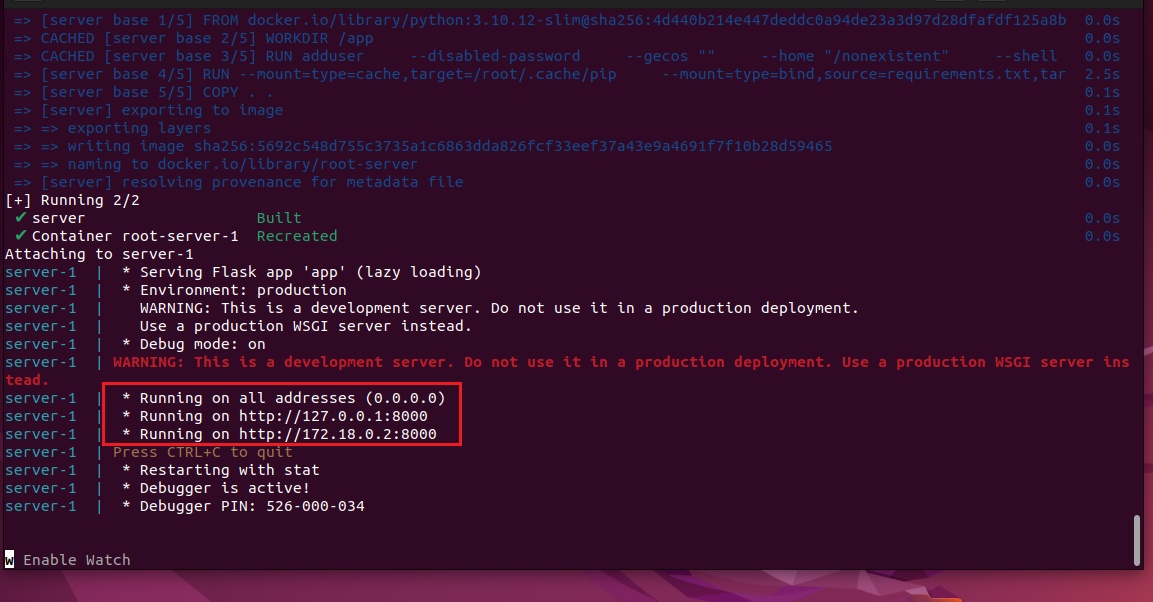

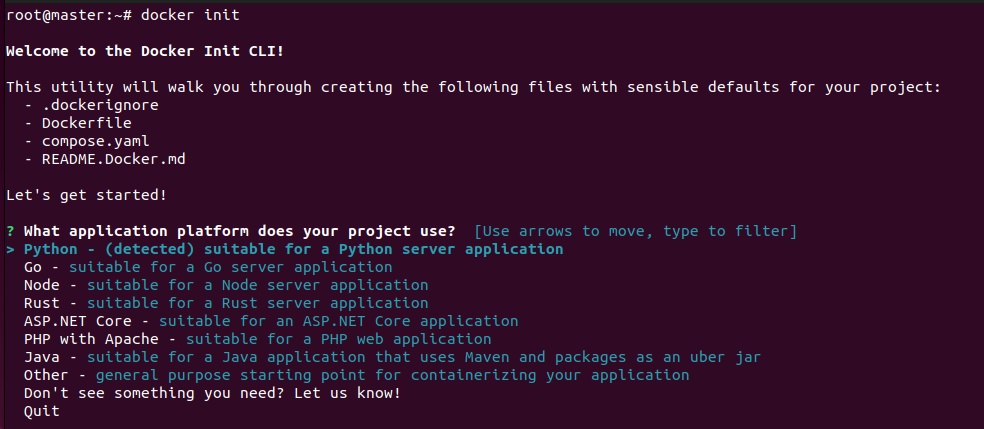

- Following is the python application using the flask module:

- flask is a dependency that you install on your system:

- $apt install python3-pip

- $pip install flask

Mongo DB

- $docker run -d -p 27017:27017 --name star_mongoDB -e MONGO_INITDB_ROOT_USERNAME=admin -e MONGO_INITDB_ROOT_PASSWORD=123 mongo

SonarQube

- $docker run -itd --name sonarqube-server -p 9000:9000 sonarqube:lts-community


Registry: Docker Hub, ECR(Elastic Container Registry of AWS), Push & Pull images,

Docker Public Repository

- hub.docker.com

- username = aziz27uk

- pass : Y......!

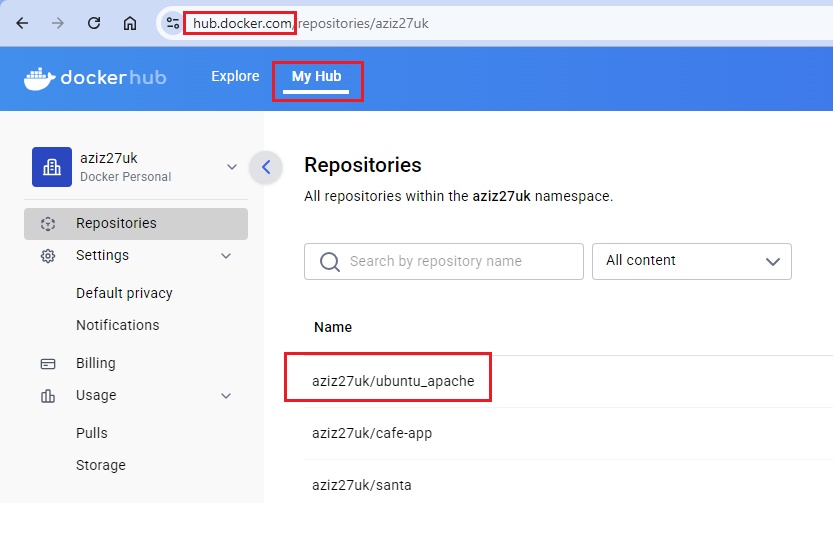

- Lab:

Create a customized image from a container running ubuntu: install git, create an image from the container, and upload it to the public registry.

- #docker run --name myubuntu -it ubuntu

- #apt-get update

- #apt-get install git

- #exit

Convert container into an image.

- #docker commit myubuntu aziz27uk/starubuntu (the image name must start with your docker hub user ID; here the image name is aziz27uk/starubuntu)

- The image is now available on the docker host and needs to be uploaded to docker hub.

- Login to docker hub from terminal.

- #docker login (enter login name and password)

- #docker push aziz27uk/starubuntu

- login to docker hub and check your images in repositories.

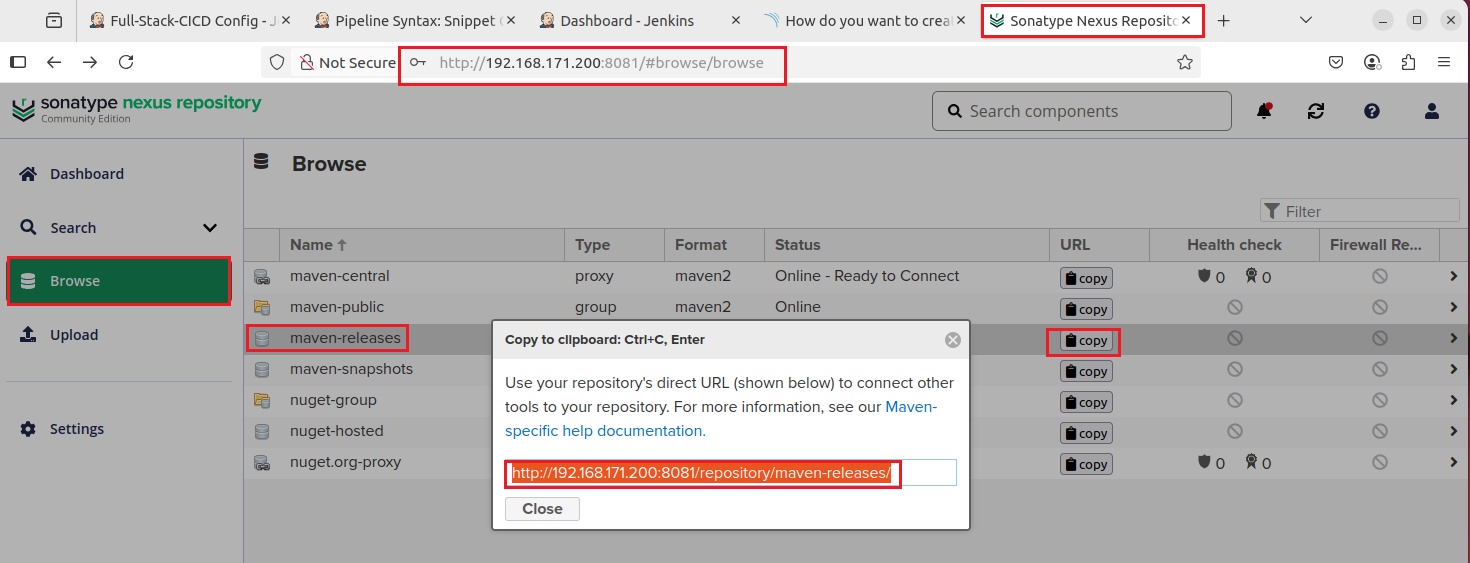

Docker Private Repository

- You create a custom image with the required o/s, applications, plugins, extensions, etc. It can be shared online so that a particular person can use and deploy it after entering credentials.

- Create an account with docker hub and upload your images. hub.docker.com (username = aziz27uk or aziz27uk@yahoo.co.uk, pass : Y......!)

- In a private repository, users must enter a username and password to download an image.

Docker Alpine Repository

- Images in this repository are very small, whereas the standard official images are much larger.

Upload image and deploy from Docker Registry

- Create an image

- Exporting container which creates .tar file

- root@ubuntu-agent:~# docker container export 0fea384b2d41 > abc1.tar (instead of > you can use -o)

- root@ubuntu-agent:~# docker container export 0fea384b2d41 -o abc2.tar

- root@ubuntu-agent:~# ls (abc1.tar and abc2.tar are created)

- Create image by importing the .tar file and deploy.

- root@ubuntu-agent:~#docker image import abc1.tar starimage1 (image with the name starimage1 will be created)

- root@ubuntu-agent:~# docker image ls

- root@ubuntu-agent:~# docker container run -it starimage1 /bin/bash

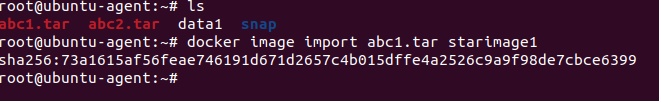

- Create tag for the image: tag name should be unique, create with name used to create account with docker hub (aziz27uk)

- $docker tag starimage1 aziz27uk/ubuntu_apache

- $docker image ls (check tag is applied)

- Upload image(starimage1)

- $docker image push star/ubuntu_apache (public by default)

- Error: request acces to resource is denied

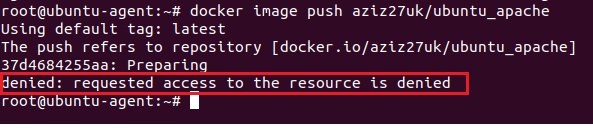

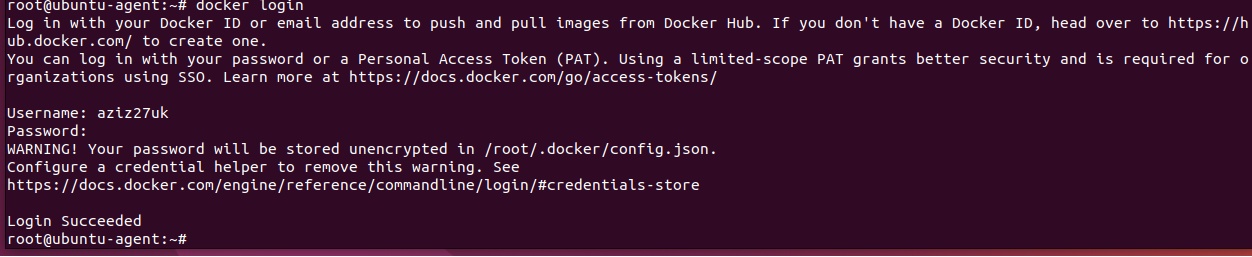

- Login to Docker Hub:

- $docker login (enter credentials)

- $docker image push star/ubuntu_apache

- image pushed, check in docker hub

- Deploy container using custom image (aziz27uk/ubuntu_apache)

- root@ubuntu-agent:~# docker container run -it -name webserver aziz27uk/ubuntu_apache:latest /bin/bash

- first it will check the local repository, if image is not found than it will check docker hub.

- data1 folder was created before image is created.

- Exporting container which creates .tar file

- code

- code

- Create a image

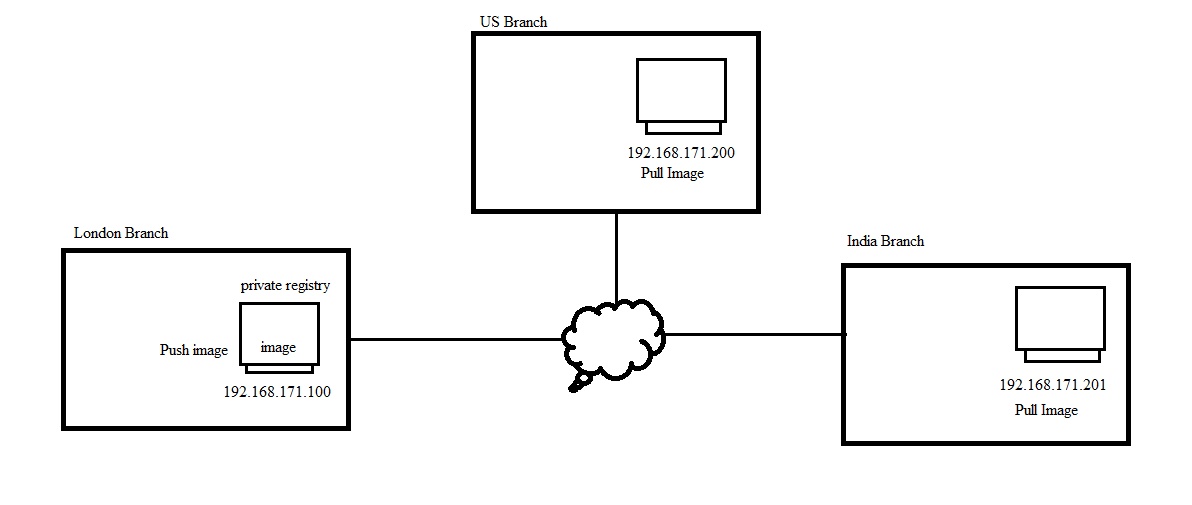

Own Private Registry at on-prem

- Instead of using the Docker registry to upload and share images, you can create your own registry on one of your computers and share it with other branches of the company. Other branches can access it through the public IP address.

- Lab:

- Create a VM

- on cloud / on prem

- Install docker

- $sudo su - (root user access)

- $apt-get update

- $apt install docker.io

- Pull docker registry image from docker hub

- $docker image pull registry

- $docker image pull nginx

- $docker image pull ubuntu

- $docker image ls (3 images: registry, nginx, ubuntu)

- $docker container ls

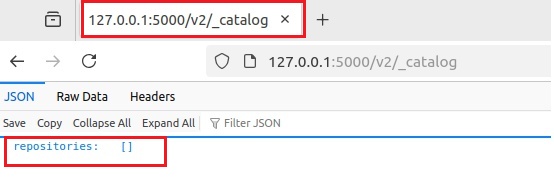

- Deploy registry image (registry works at 5000 port)

- root@ubuntu-agent:~# docker container run -itd -p 5000:5000 --name star_registry registry

- root@ubuntu-agent:~#docker container ls

- check any images in the private registry:

- root@ubuntu-agent:~# apt-get install elinks (a text-mode browser)

- open the catalog in the browser: elinks http://127.0.0.1:5000/v2/_catalog

- There are no images in the registry.

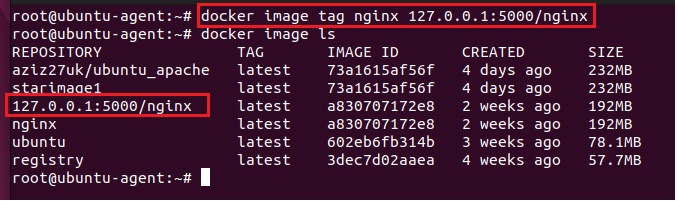

- Push image nginx to private registry:

- root@ubuntu-agent:~# docker image tag nginx 127.0.0.1:5000/nginx

- root@ubuntu-agent:~#docker image ls (it will show all images including tagged one)

- Now push the tagged registry into private repository

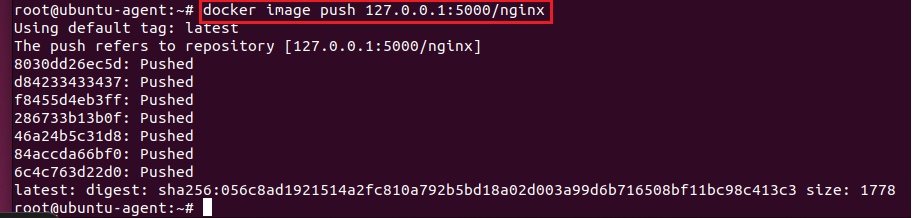

- root@ubuntu-agent:~#docker push image 127.0.0.1:5000/nginx

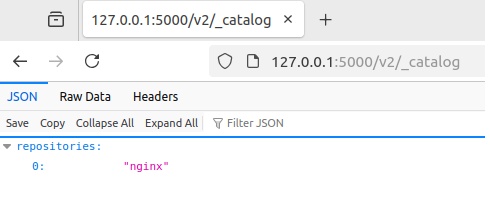

- open browser and run http://127.0.0.1:5000/v2/_catalog

- nginx image has been pushed to private registry.
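The tag, push and catalog steps above all depend on the same registry address, so small helpers keep it in one place. A sketch assuming the registry is served over plain http as in this lab; registry_ref, catalog_url and tags_url are hypothetical helper names:

```shell
# registry_ref REGISTRY NAME [TAG]: build the reference used by tag and push.
registry_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "${3:-latest}"
}

# catalog_url REGISTRY: URL that lists the repositories held by the registry.
catalog_url() {
  printf 'http://%s/v2/_catalog\n' "$1"
}

# tags_url REGISTRY NAME: URL that lists the tags of one repository.
tags_url() {
  printf 'http://%s/v2/%s/tags/list\n' "$1" "$2"
}

# Example (run on the registry host):
#   docker image tag nginx "$(registry_ref 127.0.0.1:5000 nginx)"
#   docker image push "$(registry_ref 127.0.0.1:5000 nginx)"
#   curl -s "$(catalog_url 127.0.0.1:5000)"
#   curl -s "$(tags_url 127.0.0.1:5000 nginx)"
```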

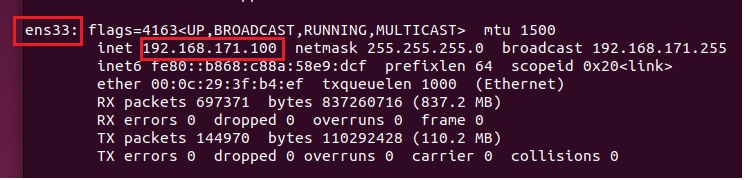

- VM's IP address is 192.168.171.100 or public IP address of VM

- root@ubuntu-agent:~#ifconfig

- Tag it:

- root@ubuntu-agent:~# docker image tag nginx 192.168.171.100:5000/nginx

- Push it to local repository:

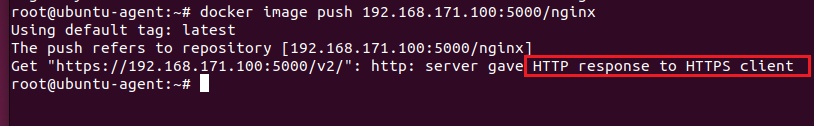

- root@ubuntu-agent:~#docker image push 192.168.171.100:5000/nginx

- The push fails because, except for localhost, docker accepts only secure (https) registries by default; either bypass/skip https or install a certificate.

- bypass/skip https:

- root@ubuntu-agent:~#vim daemon.json

-

{"insecure-registries" : ["192.168.171.100:5000"]}

- save the file and exit

- copy the file to /etc/docker

- root@ubuntu-agent:~#cp daemon.json /etc/docker

- restart docker host:

- root@ubuntu-agent:~#systemctl restart docker

- The docker registry container gets stopped by the restart; start it:

- root@ubuntu-agent:~# docker container start container_ID

- root@ubuntu-agent:~# docker image push 192.168.171.100:5000/nginx

- It shows that the layers already exist, due to the earlier attempt.

- try with other image

- check in the browser of different VMs:

- install certificate for HTTPS:

- create certificate:

- root@ubuntu-agent:~# mkdir cert

- root@ubuntu-agent:~# openssl req -new -newkey rsa:2048 -nodes -sha256 -keyout cert/domain.key -x509 -days 365 -out cert/domain.crt (recent Docker releases reject certificates without a subjectAltName; on OpenSSL 1.1.1 or later you can add -addext "subjectAltName=DNS:repo.docker.local")

- Country Name (2 letter code) [xx]: enter

- State or Province Name (full name) []: enter

- Locality Name (eg, city) [Default City]: enter

- Organization Name (ex, company) [Default Company Ltd]: enter

- Organizational Unit Name (ex, section) []: enter

- Common Name (eg, your name or your server's hostname []: repo.docker.local

- Email Address: []: enter

- cd /etc/docker

- mkdir certs.d (docker looks for registry certificates under /etc/docker/certs.d)

- cd certs.d

- mkdir repo.docker.local:5000

- cd

- cd cert/

- cp domain.crt /etc/docker/certs.d/repo.docker.local\:5000/ca.crt

- cd

- systemctl restart docker

- root@ubuntu-agent:~# docker container run -d -p 5000:5000 --name secure_registry -v $(pwd)/cert/:/cert -e REGISTRY_HTTP_TLS_CERTIFICATE=/cert/domain.crt -e REGISTRY_HTTP_TLS_KEY=/cert/domain.key registry

- root@ubuntu-agent:~# docker image tag nginx repo.docker.local:5000/nginx

- docker image ls

- root@ubuntu-agent:~# docker image push repo.docker.local:5000/nginx

- Error: private_registry12.jpg

- Edit /etc/hosts and add 192.168.171.100 repo.docker.local

- root@ubuntu-agent:~# docker image push repo.docker.local:5000/nginx


ECR - Elastic Container Registry (AWS)

- Create a Ubuntu VM and install Docker.

- $sudo apt update

- $sudo apt install docker.io (Install docker from local repository)

- Run hello-world to check docker installation:

- $docker pull hello-world (by default only the root user can access docker; assign permission to your user)

- When you install docker, a group named docker is created; whoever is added to this group can run docker commands. The root user can run them by default.

- When you create a user, a group with the same name as the user is also created; this group is the user's primary group. You can add additional groups to the user.

- Add user (abdul) to docker group:

- $sudo usermod -aG docker abdul (-G sets the user's supplementary groups and -a appends to them instead of replacing them; the primary group is changed with -g; docker is the group, abdul is the user). Log off and log on, or run $newgrp docker to start a shell in which the new group is active.

- Start the Docker service:

- $sudo systemctl start docker or

- $sudo service docker start

- $sudo systemctl enable docker (starts Docker at boot)

- $docker info

- Check the Docker service status:

- $sudo systemctl status docker

- Log in to the AWS console and search for Elastic Container Registry:

- Create a repository.

- Repository name:

- 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr (565393050355.dkr.ecr.eu-west-2.amazonaws.com = registry URI, made up of the AWS account ID and the region; star_ecr = repository name).

- Encryption configuration:

- AES-256: Industry standard Advanced Encryption Standard (AES) encryption

- AWS KMS: AWS Key Management Service (KMS)

- Create

- ecr1.jpg

- click star_ecr to check images in this repository:

- ecr2.jpg

- Click View push commands to get the commands for pushing images to this repository from Docker.

- ecr3.jpg

- First install the AWS CLI so that AWS credentials can be configured on the machine you push from.

- $curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

- $unzip awscliv2.zip

- $sudo ./aws/install

- $aws configure

- It is not best practice to push and pull images using the AWS account root user. Create an IAM user, grant it the required permissions, and use it for these tasks.

- Create a user:

- Go to IAM > Users > Create user, enter a name, select Provide user access to the AWS Management Console, select I want to create an IAM user, choose Custom password, then click Next.

- A new IAM user has no permissions by default. Assign a policy so that this user can push and pull images from the private repository:

- Click Attach policies directly and choose AmazonEC2ContainerRegistryFullAccess.

- Click Create.

- Click the created user and go to Security credentials > Access keys > Create access key, select Command Line Interface (CLI), click Next, create the access key, and copy both the access key ID and the secret access key.

- Configure the AWS CLI with the user's credentials:

- $aws configure

- AWS Access Key ID: <your-access-key-id>

- AWS Secret Access Key: <your-secret-access-key>

- Default Region Name: eu-west-2

- Default output format: table

- Now copy the push commands from the AWS console and run them one by one.

- Retrieve an authentication token and authenticate your Docker client to your registry:

- aws ecr get-login-password --region eu-west-2 | docker login --username AWS --password-stdin 565393050355.dkr.ecr.eu-west-2.amazonaws.com

- Error: An error occurred (InvalidSignatureException) when calling the GetAuthorizationToken operation: Signature not yet current: 20250601T172510Z is still later than 20250601T123631Z (20250601T122131Z + 15 min.)

- Error: Cannot perform an interactive login from a non TTY device

- Cause: the VM clock is ahead of AWS time by more than the allowed 15 minutes, so the token request is rejected. docker login then receives no password on stdin, which most likely produces the second error.

- Solution: synchronize the clock: sudo apt-get install ntp, sudo service ntp start

- aws ecr get-login-password --region eu-west-2 | docker login --username AWS --password-stdin 565393050355.dkr.ecr.eu-west-2.amazonaws.com

- Login Succeeded.

- $docker images (list of images )

- secret_santa:v1

- Create tag for the image:

- $docker tag secret_santa:v1 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest

- $docker images

- 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest

- Push image to ECR private repository:

- $docker push 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest (with this tag docker will push image to AWS private registry)

- Check the pushed image in the repository in the AWS ECR console.

- Pull the image from ECR private registry:

- $aws configure list (check that the credentials are configured)

- Copy the image URI from the AWS ECR console: 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest

- $docker pull <aws_account_id>.dkr.ecr.<region>.amazonaws.com/<repository_name>:<tag>

- $docker pull 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest

- Create the container using the AWS ECR private registry image:

- $docker run -d --name star_secretsanta -p 8081:8080 565393050355.dkr.ecr.eu-west-2.amazonaws.com/star_ecr:latest
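The login, tag, and push steps above can be wrapped in a small script. This is a minimal sketch under the assumption that the AWS CLI is already configured and Docker is running; the function names `ecr_image_uri` and `ecr_push` are hypothetical helpers, not AWS or Docker commands.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are illustrative assumptions.

# Build the full image URI for an ECR repository.
# Arguments: AWS account ID, region, repository name, tag.
ecr_image_uri() {
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Log in to the registry, tag a local image, and push it.
# Arguments: local image, AWS account ID, region, repository name, tag.
ecr_push() {
  local image="$1" account="$2" region="$3" repo="$4" tag="$5"
  local registry="${account}.dkr.ecr.${region}.amazonaws.com"
  local uri
  uri="$(ecr_image_uri "$account" "$region" "$repo" "$tag")"

  # The token from the AWS CLI is piped straight into docker login.
  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin "$registry" || return 1
  docker tag "$image" "$uri" || return 1
  docker push "$uri"
}
```

With the values used in these notes, the call would be `ecr_push secret_santa:v1 565393050355 eu-west-2 star_ecr latest`.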

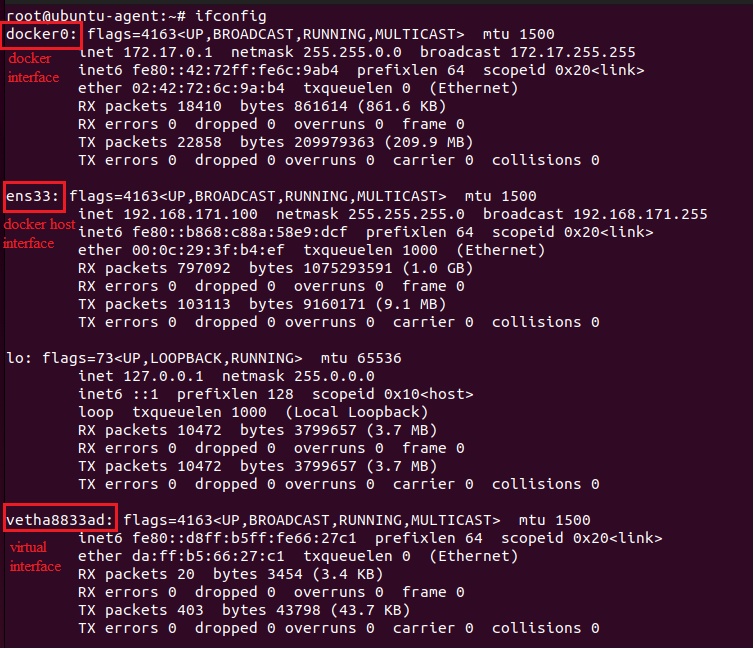

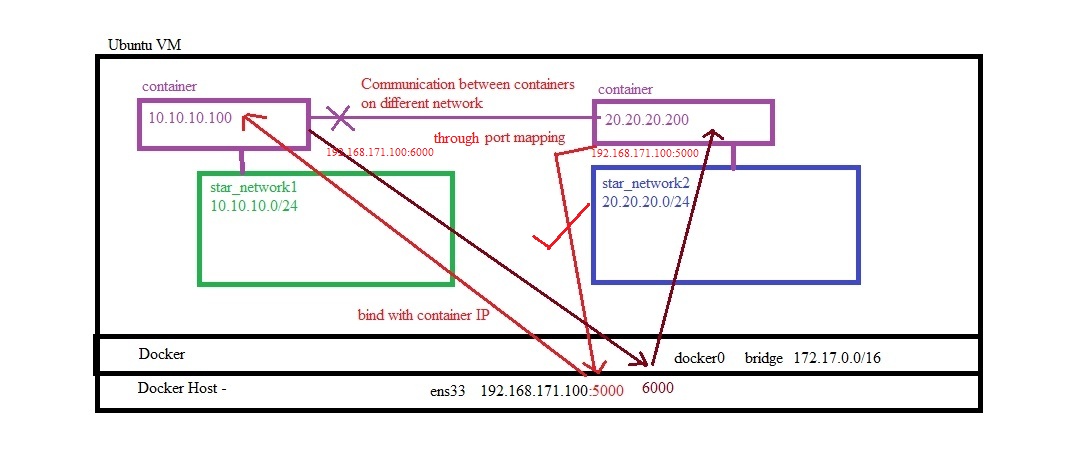

Network Host & Container

Host network, docker network, container network

- Host / Docker host Networking:

- Windows 11 is installed on a PC that has received the IP address 10.50.10.186 from the network.

- VMware Workstation is installed, and NAT is configured in the Virtual Network Editor with CIDR 192.168.171.0/24. VMs are assigned IP addresses from this range, and all VMs have internet access through NAT.

- A VM is created with IP address 192.168.171.106, netmask 255.255.255.0, gateway 192.168.171.2.

- When you install Docker on a VM, it creates a docker0 interface and assigns it the IP address 172.17.0.1 from the CIDR range 172.17.0.0/16. This interface is linked to the host network.

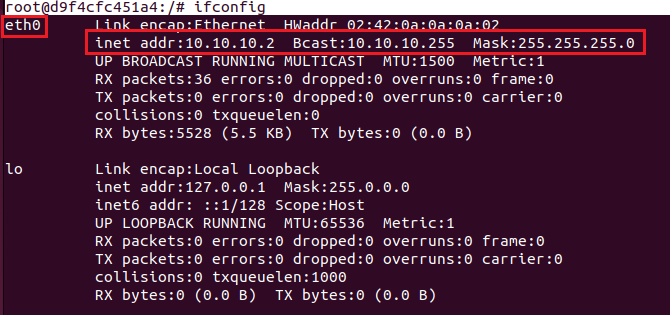

- The VM on which Docker is installed is called the Docker host, and it has its own private/public IP address (e.g., 192.168.171.100).

- This VM has an interface eth0 or ens33 with the IP address 192.168.171.100.

- $ifconfig or

- $ip a

- docker0 is Docker's bridge interface.

- ens33 is the network interface of the Docker host (Ubuntu).

- vetha8833ad is the host-side end of a virtual Ethernet (veth) pair that connects a container to the docker0 bridge.

- iptables:

- Save the iptables rules to a file.

- $iptables-save > iptable1

- $cat iptable1

- # Generated by iptables-save v1.8.10 (nf_tables) on Tue Oct 21 12:54:05 2025

*raw

:PREROUTING ACCEPT [10833:34270208]

:OUTPUT ACCEPT [0:0]

-A PREROUTING -d 172.17.0.2/32 ! -i docker0 -j DROP

-A PREROUTING -d 172.17.0.3/32 ! -i docker0 -j DROP

-A PREROUTING -d 172.17.0.4/32 ! -i docker0 -j DROP

COMMIT

# Completed on Tue Oct 21 12:54:05 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Tue Oct 21 12:54:05 2025

*filter

:INPUT ACCEPT [0:0]

:FORWARD DROP [0:0]

:OUTPUT ACCEPT [0:0]

:DOCKER - [0:0]

:DOCKER-BRIDGE - [0:0]

:DOCKER-CT - [0:0]

:DOCKER-FORWARD - [0:0]

:DOCKER-ISOLATION-STAGE-1 - [0:0]

:DOCKER-ISOLATION-STAGE-2 - [0:0]

:DOCKER-USER - [0:0]

-A FORWARD -j DOCKER-USER

-A FORWARD -j DOCKER-FORWARD

-A DOCKER ! -i docker0 -o docker0 -j DROP

-A DOCKER-BRIDGE -o docker0 -j DOCKER

-A DOCKER-CT -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A DOCKER-FORWARD -j DOCKER-CT

-A DOCKER-FORWARD -j DOCKER-ISOLATION-STAGE-1

-A DOCKER-FORWARD -j DOCKER-BRIDGE

-A DOCKER-FORWARD -i docker0 -j ACCEPT

-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

-A DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP

COMMIT

# Completed on Tue Oct 21 12:54:05 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Tue Oct 21 12:54:05 2025

*nat

:PREROUTING ACCEPT [1921:353679]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [1106:77649]

:POSTROUTING ACCEPT [1106:77649]

:DOCKER - [0:0]

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

COMMIT

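# Completed on Tue Oct 21 12:54:05 2025

- Explanation:

- -A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE: MASQUERADE is source NAT. Packets from the container range 172.17.0.0/16 that leave through any interface other than docker0 have their source address rewritten to the host's address on that outgoing interface.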
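To find this rule in a saved dump without reading the whole file, something like the following sketch could be used. It assumes the dump was written by `iptables-save` as shown above; `masquerade_rules` and `masquerade_sources` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are illustrative assumptions.

# Print every MASQUERADE rule found in an iptables-save dump.
# Argument: path to the dump file (for example iptable1).
masquerade_rules() {
  grep -- '-j MASQUERADE' "$1"
}

# Print only the source CIDR (the value after -s) of each MASQUERADE rule.
masquerade_sources() {
  masquerade_rules "$1" \
    | awk '{ for (i = 1; i < NF; i++) if ($i == "-s") print $(i + 1) }'
}
```

Running `masquerade_sources iptable1` on the dump from these notes should list the container range that is NATed on the way out.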

- Docker Networking:

- Docker creates the following networks by default:

- $docker network ls

- root@docker:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

3cbea76b9050 bridge bridge local

2043aee75972 host host local

aa07fab46e70 none null local

- List the bridge networks on the Linux system:

- $brctl show (brctl comes from the bridge-utils package)

- Container network drivers: the following network drivers are available for container networks.

- bridge

- host

- macvlan

- ipvlan: containers get their own IP addresses on the parent interface's network while sharing the host interface's MAC address

- none

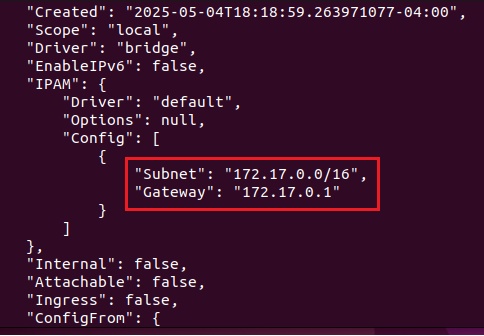

- bridge:

- A bridge network is created when Docker is installed.

- By default, every container is attached to the bridge network. Any new container gets an IP address and a virtual adapter from it.

- The bridge creates an isolated network for containers.

- It acts as a virtual switch (SDN switch = software-defined network switch). It is an unmanaged switch: you cannot configure routing or switching on it.

- It provides a virtual adapter and an IP address to each container from the default CIDR range 172.17.0.0/16.

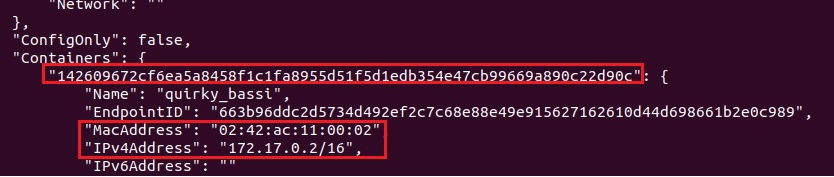

- $docker inspect bridge: shows the network details of the bridge and of the containers attached to it

- Subnet of the bridge:

- $docker inspect bridge | grep -i subnet

- "Subnet": "172.17.0.0/16"

- IP addresses assigned to the containers.
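To check whether an address belongs to the bridge subnet, the comparison can be done with plain shell arithmetic. This is a minimal sketch for IPv4 only; `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and octets with leading zeros are not handled.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are illustrative assumptions.

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Intentionally unquoted so the address splits on dots into $1..$4.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) if the address lies inside the CIDR block.
# Arguments: IPv4 address, CIDR block such as 172.17.0.0/16.
ip_in_cidr() {
  local ip="$1" network="${2%/*}" prefix="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$network") & mask )) ]
}
```

For example, `ip_in_cidr 172.17.0.3 172.17.0.0/16` succeeds for a container address, while the Docker host address 192.168.171.100 fails the same check.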

- Lab: containers on the same Docker host that are attached to the same bridge can communicate with each other and have internet access.

- $docker run -dit --name=web1 alpine (-d keeps the container running in the background)

- $docker run -dit --name=web2 alpine

- $docker inspect web1

- $docker inspect web2 (note the IP address)

- Log in to web1:

- $docker exec -it web1 sh (Alpine ships sh, not bash)

- #ping 172.17.0.3 (web2 replies)

- Outbound traffic rule: traffic from the container to outside networks is allowed.

- #ping google.com (replies are received)

- #ip route

- default via 172.17.0.1 dev eth0

172.17.0.0/16 dev eth0 scope link src 172.17.0.

- default via 172.17.0.1 is the gateway through which traffic leaves the container.

- This gateway connects the container to the host machine.

- Inbound traffic rule: inbound traffic from outside is restricted.

- From inside this network (the Docker host or another container on the bridge), you can connect to a container directly using its IP address from the 172.17.0.0/16 range.

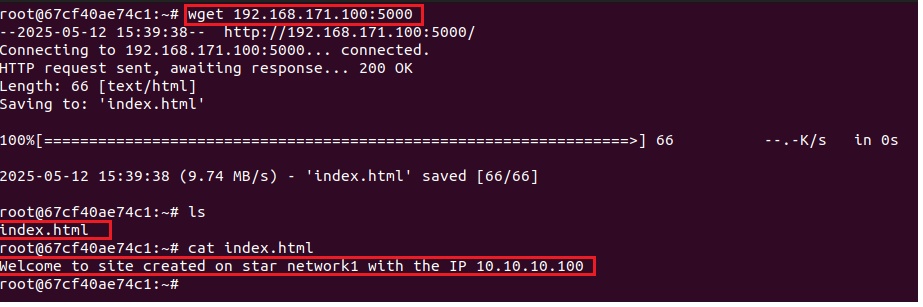

- To connect from outside, you use the Docker host IP address 192.168.171.103, which is mapped to the container.

- With multiple containers, the single Docker host IP cannot identify the target container on its own, so you configure port mapping.

- Port mapping binds a port on the Docker host to a port on the container. To reach the container from outside the network, connect to the Docker host IP with the mapped port number, and Docker forwards the connection to the container.
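The port mapping described above is set with the `-p host_port:container_port` option of `docker run`. A minimal sketch follows; the helper names `run_published` and `show_ports`, the container name web4, and host port 8080 are example values chosen for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and example values are illustrative assumptions.

# Start a detached container with a host port published to a container port.
# Arguments: container name, host port, container port, image.
run_published() {
  local name="$1" host_port="$2" container_port="$3" image="$4"
  docker run -d --name "$name" -p "${host_port}:${container_port}" "$image"
}

# Show the port mappings Docker created for a container.
show_ports() {
  docker port "$1"
}
```

After `run_published web4 8080 80 nginx`, a machine outside the Docker host would reach the container at the Docker host IP on port 8080, for example http://192.168.171.103:8080.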

- Container:

- Each container needs an IP address so that it can communicate with others.

- After a container is created, the bridge assigns it a virtual interface and an IP address.

- Container public IP: the bridge uses NAT and is linked to the host machine, so the host IP acts as the public IP of the container. The container IP is translated to the host IP to reach outside networks.

- Virtual Interface:

- It carries traffic between the Docker host and the container.

- It is visible only while the container is running.

Container, IP, hostname, DNS, Route

- Get container IP address:

- $docker inspect --format='{{.NetworkSettings.IPAddress}}' web3 (web3 is the container name; you can also use the container ID)
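The `.NetworkSettings.IPAddress` field reflects the default bridge network. For a container attached to other networks, the addresses are listed per network under `.NetworkSettings.Networks`. A small wrapper could look like the sketch below; `container_ips` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only: the helper name is an illustrative assumption.

# Print the IP address of a container on every network it is attached to,
# separated by spaces.
# Argument: container name or ID.
container_ips() {
  docker inspect \
    --format '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' "$1"
}
```

Calling `container_ips web3` prints one address per attached network.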